PG Admin

- PG Logical Replication Explained

- Query Optimization: The Macro Approach with pg_stat_statements

- Rescue Data with pg_filedump?

- Collation in PostgreSQL

- PostgreSQL Replica Identity Explained

- Slow Query Diagnosis

PG Logical Replication Explained

Logical Replication

Logical Replication is a method of replicating data objects and their changes based on the Replica Identity (typically primary keys) of data objects.

The term Logical Replication contrasts with Physical Replication: physical replication works with exact block addresses and byte-by-byte copying, while logical replication allows fine-grained control over the replication process.

Logical replication is based on a Publication and Subscription model:

- A Publisher can have multiple publications, and a Subscriber can have multiple subscriptions.

- A publication can be subscribed to by multiple subscribers, while a subscription can only subscribe to one publisher, but can subscribe to multiple different publications from the same publisher.

Logical replication for a table typically works like this: The subscriber takes a snapshot of the publisher’s database and copies the existing data in the table. Once the data copy is complete, changes (inserts, updates, deletes, truncates) on the publisher are sent to the subscriber in real-time. The subscriber applies these changes in the same order, ensuring transactional consistency in logical replication. This approach is sometimes called transactional replication.

Typical use cases for logical replication include:

- Migration: Replication across different PostgreSQL versions and operating system platforms.

- CDC (Change Data Capture): Collecting incremental changes in a database (or a subset of it) and triggering custom logic on subscribers for these changes.

- Data Integration: Combining multiple databases into one, or splitting one database into multiple, for fine-grained integration and access control.

A logical subscriber behaves like a normal PostgreSQL instance (primary), and can also create its own publications and have its own subscribers.

If the logical subscriber is read-only, there will be no conflicts. However, if writes are performed on the subscriber’s subscription set, conflicts may occur.

Publication

A Publication can be defined on a physical replication primary. The node that creates the publication is called the Publisher.

A Publication is a collection of changes from a set of tables. It can also be viewed as a change set or replication set. Each publication can only exist in one Database.

Publications are different from Schemas and don’t affect how tables are accessed. (Whether a table is included in a publication or not doesn’t affect its access)

Currently, publications can only contain tables (i.e., indexes, sequences, materialized views are not published), and each table can be added to multiple publications.

Unless creating a publication for ALL TABLES, objects (tables) in a publication can only be explicitly added (via ALTER PUBLICATION ADD TABLE).

Publications can filter the types of changes required: any combination of INSERT, UPDATE, DELETE, and TRUNCATE, similar to trigger events. By default, all changes are published.

Replica Identity

A table included in a publication must have a Replica Identity, which is necessary to locate the rows that need to be updated on the subscriber side for UPDATE and DELETE operations.

By default, the Primary Key is the table’s replica identity. A UNIQUE NOT NULL index can also be used as a replica identity.

If there is no replica identity, it can be set to FULL, meaning the entire row is used as the replica identity. (An interesting case: multiple identical records can be handled correctly, as shown in later examples) Using FULL mode for replica identity is inefficient (because each row modification requires a full table scan on the subscriber, which can easily overwhelm the subscriber), so this configuration should only be used as a last resort. Using FULL mode for replica identity also has a limitation: the columns included in the replica identity on the subscriber’s table must either match the publisher or be fewer than on the publisher.

INSERT operations can always proceed regardless of the replica identity (because inserting a new record doesn’t require locating any existing records on the subscriber; while deletes and updates need to locate records through the replica identity). If a table without a replica identity is added to a publication with UPDATE and DELETE, subsequent UPDATE and DELETE operations will cause errors on the publisher.

The replica identity mode of a table can be checked in pg_class.relreplident and modified via ALTER TABLE.

ALTER TABLE tbl REPLICA IDENTITY

{ DEFAULT | USING INDEX index_name | FULL | NOTHING };

Although various combinations are possible, in practice, only three scenarios are viable:

- Table has a primary key, using the default replica identity (default).

- Table has no primary key but has a non-null unique index, explicitly configured with the index replica identity.

- Table has neither primary key nor non-null unique index, explicitly configured with the full replica identity (very inefficient, only as a last resort).

- All other cases cannot properly complete logical replication. Because the output carries insufficient information, errors may or may not be raised.

- Special attention: If a table with the nothing replica identity is included in logical replication, performing updates or deletes on it will cause errors on the publisher!

| Replica Identity Mode\Table Constraints | Primary Key(p) | Unique NOT NULL Index(u) | Neither(n) |

|---|---|---|---|

| default | Valid | x | x |

| index | x | Valid | x |

| full | Inefficient | Inefficient | Inefficient |

| nothing | xxxx | xxxx | xxxx |
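The three viable scenarios can be sketched as follows. The table names are borrowed from the publication listing later in this article, but their definitions here are assumptions made only for illustration, as is the index name t_unique_id_idx.

```sql
-- Hypothetical table definitions, for illustration only.
CREATE TABLE t_normal (id int PRIMARY KEY, v text);   -- has a primary key
CREATE TABLE t_unique (id int NOT NULL, v text);       -- no primary key
CREATE UNIQUE INDEX t_unique_id_idx ON t_unique (id);  -- unique index on a NOT NULL column
CREATE TABLE t_tricky (id int, v text);                -- neither key nor unique index

-- t_normal keeps the default mode; the other two are configured explicitly.
ALTER TABLE t_unique REPLICA IDENTITY USING INDEX t_unique_id_idx;
ALTER TABLE t_tricky REPLICA IDENTITY FULL;

-- Check the resulting mode: d = default, i = index, f = full, n = nothing.
SELECT relname, relreplident
FROM pg_class
WHERE relname IN ('t_normal', 't_unique', 't_tricky');
```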

Managing Publications

CREATE PUBLICATION is used to create a publication, DROP PUBLICATION to remove it, and ALTER PUBLICATION to modify it.

After a publication is created, tables can be dynamically added to or removed from it using ALTER PUBLICATION, and these operations are transactional.

CREATE PUBLICATION name

[ FOR TABLE [ ONLY ] table_name [ * ] [, ...]

| FOR ALL TABLES ]

[ WITH ( publication_parameter [= value] [, ... ] ) ]

ALTER PUBLICATION name ADD TABLE [ ONLY ] table_name [ * ] [, ...]

ALTER PUBLICATION name SET TABLE [ ONLY ] table_name [ * ] [, ...]

ALTER PUBLICATION name DROP TABLE [ ONLY ] table_name [ * ] [, ...]

ALTER PUBLICATION name SET ( publication_parameter [= value] [, ... ] )

ALTER PUBLICATION name OWNER TO { new_owner | CURRENT_USER | SESSION_USER }

ALTER PUBLICATION name RENAME TO new_name

DROP PUBLICATION [ IF EXISTS ] name [, ...];

publication_parameter mainly includes two options:

- publish: Defines the types of change operations to publish, as a comma-separated string, defaulting to insert, update, delete, truncate.

- publish_via_partition_root: New option in PostgreSQL 13. If true, partitioned tables will use the root partition’s replica identity for logical replication.
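A minimal sketch of these commands in use is shown below. The publication names pub_all and pub_ins are hypothetical, and the tables t_normal, t_unique, and t_tricky are assumed to already exist on the publisher.

```sql
-- Publish every table in the current database (requires superuser).
CREATE PUBLICATION pub_all FOR ALL TABLES;

-- Publish only inserts for an explicit list of tables.
CREATE PUBLICATION pub_ins FOR TABLE t_normal, t_unique
    WITH (publish = 'insert');

-- Membership and parameters can be changed later; these changes are transactional.
ALTER PUBLICATION pub_ins ADD TABLE t_tricky;
ALTER PUBLICATION pub_ins SET (publish = 'insert, update, delete');
ALTER PUBLICATION pub_ins DROP TABLE t_tricky;

-- Remove a publication that is no longer needed.
DROP PUBLICATION IF EXISTS pub_ins;
```

Note that a table with no usable replica identity can be added to a publication that publishes updates and deletes, but later UPDATE and DELETE statements on it will then fail on the publisher.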

Querying Publications

Publications can be queried using the psql meta-command \dRp.

# \dRp

Owner | All tables | Inserts | Updates | Deletes | Truncates | Via root

----------+------------+---------+---------+---------+-----------+----------

postgres | t | t | t | t | t | f

pg_publication Publication Definition Table

pg_publication contains the original publication definitions, with each record corresponding to a publication.

# table pg_publication;

oid | 20453

pubname | pg_meta_pub

pubowner | 10

puballtables | t

pubinsert | t

pubupdate | t

pubdelete | t

pubtruncate | t

pubviaroot | f

- puballtables: Whether it includes all tables.

- pubinsert|update|delete|truncate: Whether these operations are published.

- pubviaroot: If this option is set, any partition (leaf table) will use the top-level partitioned table’s replica identity. This allows treating the entire partitioned table as one table rather than a series of tables for publication.

pg_publication_tables Publication Content Table

pg_publication_tables is a view composed of pg_publication, pg_class, and pg_namespace, recording the table information included in publications.

postgres@meta:5432/meta=# table pg_publication_tables;

pubname | schemaname | tablename

-------------+------------+-----------------

pg_meta_pub | public | spatial_ref_sys

pg_meta_pub | public | t_normal

pg_meta_pub | public | t_unique

pg_meta_pub | public | t_tricky

Use pg_get_publication_tables to get the OIDs of published tables based on the publication name:

SELECT * FROM pg_get_publication_tables('pg_meta_pub');

SELECT p.pubname,

n.nspname AS schemaname,

c.relname AS tablename

FROM pg_publication p,

LATERAL pg_get_publication_tables(p.pubname::text) gpt(relid),

pg_class c

JOIN pg_namespace n ON n.oid = c.relnamespace

WHERE c.oid = gpt.relid;

pg_publication_rel provides similar information, but as raw data from a many-to-many OID correspondence perspective.

oid | prpubid | prrelid

-------+---------+---------

20414 | 20413 | 20397

20415 | 20413 | 20400

20416 | 20413 | 20391

20417 | 20413 | 20394

It’s important to note the difference between these two: When publishing for ALL TABLES, pg_publication_rel won’t have specific table OIDs, but pg_publication_tables can query the actual list of tables included in logical replication. Therefore, pg_publication_tables should typically be used as the reference.

When creating a publication, the database first modifies the pg_publication catalog, then fills in the publication table information into pg_publication_rel.

Subscription

A Subscription is the downstream of logical replication. The node that defines the subscription is called the Subscriber.

A subscription defines: how to connect to another database, and which publications from the target publisher to subscribe to.

A logical subscriber behaves like a normal PostgreSQL instance (primary), and can also create its own publications and have its own subscribers.

Each subscriber receives changes through a Replication Slot, and during the initial data replication phase, additional temporary replication slots may be required.

A logical replication subscription can serve as a synchronous replication standby, with the standby’s name defaulting to the subscription name, or a different name can be used by setting application_name in the connection information.

Only superusers can dump subscription definitions using pg_dump, as only superusers can read all subscription information from pg_subscription. When a regular user runs the dump, subscriptions are skipped and a warning message is printed.

Logical replication doesn’t replicate DDL changes, so tables in the publication set must already exist on the subscriber side. Only changes to regular tables are replicated; views, materialized views, sequences, and indexes are not replicated.

Tables on the publisher and subscriber are matched by their fully qualified names (e.g., public.table), and replicating changes to a table with a different name is not supported.

Columns on the publisher and subscriber are matched by name. Column order doesn’t matter, and data types don’t have to be identical, as long as the text representation of the two columns is compatible, meaning the text representation of the data can be converted to the target column’s type. The subscriber’s table can contain columns not present on the publisher, and these new columns will be filled with default values.

Managing Subscriptions

CREATE SUBSCRIPTION is used to create a subscription, DROP SUBSCRIPTION to remove it, and ALTER SUBSCRIPTION to modify it.

After a subscription is created, it can be paused and resumed at any time using ALTER SUBSCRIPTION.

Removing and recreating a subscription will result in loss of synchronization information, meaning the relevant data needs to be resynchronized.

CREATE SUBSCRIPTION subscription_name

CONNECTION 'conninfo'

PUBLICATION publication_name [, ...]

[ WITH ( subscription_parameter [= value] [, ... ] ) ]

ALTER SUBSCRIPTION name CONNECTION 'conninfo'

ALTER SUBSCRIPTION name SET PUBLICATION publication_name [, ...] [ WITH ( set_publication_option [= value] [, ... ] ) ]

ALTER SUBSCRIPTION name REFRESH PUBLICATION [ WITH ( refresh_option [= value] [, ... ] ) ]

ALTER SUBSCRIPTION name ENABLE

ALTER SUBSCRIPTION name DISABLE

ALTER SUBSCRIPTION name SET ( subscription_parameter [= value] [, ... ] )

ALTER SUBSCRIPTION name OWNER TO { new_owner | CURRENT_USER | SESSION_USER }

ALTER SUBSCRIPTION name RENAME TO new_name

DROP SUBSCRIPTION [ IF EXISTS ] name;

subscription_parameter defines some options for the subscription, including:

- copy_data (bool): Whether to copy existing data once replication starts, defaults to true.

- create_slot (bool): Whether to create a replication slot on the publisher, defaults to true.

- enabled (bool): Whether to enable the subscription, defaults to true.

- connect (bool): Whether to attempt to connect to the publisher, defaults to true. Setting it to false will force the above options (copy_data, create_slot, enabled) to false.

- synchronous_commit (enum): Overrides the synchronous_commit setting for the subscription’s apply workers, which affects how progress information is reported to the primary; defaults to off.

- slot_name: The name of the replication slot associated with the subscription. Setting it to NONE will disassociate the subscription from the replication slot.
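As a rough sketch, a subscription could be created and managed like this. The subscription name sub_demo is hypothetical; the connection string and publication name reuse the sample values that appear elsewhere in this article, and the published tables are assumed to already exist on the subscriber.

```sql
-- Run on the subscriber as a superuser.
CREATE SUBSCRIPTION sub_demo
    CONNECTION 'host=10.10.10.10 dbname=meta user=replicator password=DBUser.Replicator'
    PUBLICATION pg_meta_pub
    WITH (copy_data = true, create_slot = true, enabled = true);

-- Pause and resume applying changes.
ALTER SUBSCRIPTION sub_demo DISABLE;
ALTER SUBSCRIPTION sub_demo ENABLE;

-- Pick up tables that were added to the publication after the subscription was created.
ALTER SUBSCRIPTION sub_demo REFRESH PUBLICATION;
```

All three options in the WITH clause are shown with their default values, so the clause could be omitted; it is spelled out here only to make the parameters visible.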

Managing Replication Slots

Each active subscription receives changes from the remote publisher through a replication slot.

Typically, this remote replication slot is automatically managed, created automatically during CREATE SUBSCRIPTION and deleted during DROP SUBSCRIPTION.

In specific scenarios, it may be necessary to operate on the subscription and the underlying replication slot separately:

- When creating a subscription, if the required replication slot already exists. In this case, you can associate with the existing replication slot using create_slot = false.

- When creating a subscription, if the remote host is unreachable or its state is unclear, you can avoid accessing the remote host using connect = false. This is what pg_dump does. In this case, you must manually create the replication slot on the remote side before enabling the subscription locally.

- When removing a subscription, if you need to retain the replication slot. This typically happens when the subscriber is being moved to another machine where you want to restart the subscription. In this case, you need to first disassociate the subscription from the replication slot using ALTER SUBSCRIPTION.

- When removing a subscription, if the remote host is unreachable. In this case, you need to disassociate the replication slot from the subscription before deleting the subscription.

If the remote instance is no longer in use, it’s fine. However, if the remote instance is only temporarily unreachable, you should manually delete its replication slot; otherwise, it will continue to retain WAL and may cause the disk to fill up.
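The last two cases could look roughly like the following, using the sample subscription and slot name pg_test_sub from this article as a stand-in.

```sql
-- On the subscriber: stop the subscription, then detach it from its slot.
-- The subscription must be disabled before slot_name can be set to NONE.
ALTER SUBSCRIPTION pg_test_sub DISABLE;
ALTER SUBSCRIPTION pg_test_sub SET (slot_name = NONE);

-- The subscription can now be dropped without contacting the publisher.
DROP SUBSCRIPTION pg_test_sub;

-- Later, on the publisher, once it is reachable and the slot is no longer needed:
SELECT pg_drop_replication_slot('pg_test_sub');
```

If the slot is meant to be reused by a subscription recreated elsewhere, skip the final step and create the new subscription with create_slot = false instead.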

Querying Subscriptions

Subscriptions can be queried using the psql meta-command \dRs.

# \dRs

Name | Owner | Enabled | Publication

--------------+----------+---------+----------------

pg_bench_sub | postgres | t | {pg_bench_pub}

pg_subscription Subscription Definition Table

Each logical subscription has one record. Note that this view is cluster-wide, and each database can see the subscription information for the entire cluster.

Only superusers can access this view because it contains plaintext passwords (connection information).

oid | 20421

subdbid | 19356

subname | pg_test_sub

subowner | 10

subenabled | t

subconninfo | host=10.10.10.10 user=replicator password=DBUser.Replicator dbname=meta

subslotname | pg_test_sub

subsynccommit | off

subpublications | {pg_meta_pub}

- subenabled: Whether the subscription is enabled.

- subconninfo: Hidden from regular users because it contains sensitive information.

- subslotname: The name of the replication slot used by the subscription, also used as the logical replication origin name for deduplication.

- subpublications: List of publication names subscribed to.

- Other status information: Whether synchronous commit is enabled, etc.

pg_subscription_rel Subscription Content Table

pg_subscription_rel records information about each table in the subscription, including status and progress.

- srrelid: OID of the relation in the subscription.

- srsubstate: State of the relation in the subscription: i initializing, d copying data, s synchronization completed, r normal replication.

- srsublsn: Empty when in the i|d state. When in the s|r state, it’s the LSN position on the remote side.
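A query along these lines can make the per-table state easier to read. It is only a sketch: the state labels simply restate the list above, and it is meant to be run as a superuser in the subscriber database.

```sql
SELECT s.subname,
       sr.srrelid::regclass AS table_name,
       CASE sr.srsubstate
           WHEN 'i' THEN 'initializing'
           WHEN 'd' THEN 'copying data'
           WHEN 's' THEN 'synchronization completed'
           WHEN 'r' THEN 'normal replication'
           ELSE sr.srsubstate::text      -- any state not listed above
       END AS state,
       sr.srsublsn
FROM pg_subscription_rel sr
JOIN pg_subscription s ON s.oid = sr.srsubid
ORDER BY s.subname, sr.srrelid;
```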

When Creating a Subscription

When a new subscription is created, the following operations are performed in sequence:

- Store the publication information in the pg_subscription catalog, including connection information, replication slot, publication names, and some configuration options.

- Connect to the publisher and check replication permissions (note that it does not check if the corresponding publication exists).

- Create a logical replication slot: pg_create_logical_replication_slot(name, 'pgoutput')

- Register the tables in the replication set to the subscriber’s pg_subscription_rel catalog.

- Execute the initial snapshot synchronization. Note that existing data in the subscriber’s tables is not deleted.

Replication Conflicts

Logical replication behaves like normal DML operations, updating data even if it has been changed locally on the subscriber node. If the replicated data violates any constraint, replication stops; this situation is called a conflict.

When replicating UPDATE or DELETE operations, missing data (i.e., data to be updated/deleted no longer exists) doesn’t cause conflicts, and such operations are simply skipped.

Conflicts cause errors and abort logical replication. The logical replication management process will retry at 5-second intervals. Conflicts don’t block SQL operations on tables in the subscription set on the subscriber side. Details about conflicts can be found in the subscriber’s server logs, and conflicts must be manually resolved by the user.

Possible Conflicts in Logs

| Conflict Mode | Replication Process | Output Log |

|---|---|---|

| Missing UPDATE/DELETE Object | Continue | No Output |

| Table/Row Lock Wait | Wait | No Output |

| Violation of Primary Key/Unique/Check Constraints | Abort | Output |

| Target Table/Column Missing | Abort | Output |

| Cannot Convert Data to Target Column Type | Abort | Output |

To resolve conflicts, you can either modify the data on the subscriber side to avoid conflicts with incoming changes, or skip transactions that conflict with existing data.

Use the subscription’s node_name and LSN position to call the pg_replication_origin_advance() function to skip transactions. The current ORIGIN position can be seen in the pg_replication_origin_status system view.
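A cautious sketch of skipping a transaction is shown below. The subscription name, origin name, and LSN are placeholders taken from sample output in this article, not values to copy; the real LSN has to be determined from the logs so that it points just past the conflicting transaction. Anything skipped this way is never applied on the subscriber.

```sql
-- On the subscriber, as a superuser.
-- Stop the apply worker first so the origin is not in use.
ALTER SUBSCRIPTION pg_test_sub DISABLE;

-- Find the origin name (external_id) and its current position.
SELECT * FROM pg_replication_origin_status;

-- Advance the origin past the conflicting transaction (placeholder values).
SELECT pg_replication_origin_advance('pg_19378', '0/2A4F6B8'::pg_lsn);

-- Resume replication from the new position.
ALTER SUBSCRIPTION pg_test_sub ENABLE;
```

Fixing the conflicting rows on the subscriber is usually the safer option, because it does not discard any replicated changes.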

Limitations

Logical replication currently has the following limitations or missing features. These issues may be resolved in future versions.

Database schemas and DDL commands are not replicated. Existing schemas can be manually replicated using pg_dump --schema-only, and incremental schema changes need to be manually kept in sync (the schemas on both publisher and subscriber don’t need to be absolutely identical). Logical replication remains reliable for online DDL changes: after executing DDL changes in the publisher database, replicated data reaches the subscriber but replication stops due to table schema mismatch. After updating the subscriber’s schema, replication continues. In many cases, executing changes on the subscriber first can avoid intermediate errors.

Sequence data is not replicated. The data in identity columns served by sequences and SERIAL types is replicated as part of the table, but the sequences themselves remain at their initial values on the subscriber. If the subscriber is used as a read-only database, this is usually fine. However, if you plan to perform some form of switchover or failover to the subscriber database, you need to update the sequences to their latest values, either by copying the current data from the publisher (perhaps using pg_dump -t *seq*), or by determining a sufficiently high value from the table’s data content (e.g., max(id)+1000000). Otherwise, if you perform operations that obtain sequence values as identities on the new database, conflicts are likely to occur.

Logical replication supports replicating TRUNCATE commands, but special care is needed when TRUNCATE involves a group of tables linked by foreign keys. When executing a TRUNCATE operation, the group of associated tables on the publisher (through explicit listing or cascade association) will all be TRUNCATEd, but on the subscriber, tables not in the subscription set won’t be TRUNCATEd. This is logically reasonable because logical replication shouldn’t affect tables outside the replication set. But if there are tables not in the subscription set that reference tables in the subscription set through foreign keys, the TRUNCATE operation will fail.

Large objects are not replicated

Only tables can be replicated (including partitioned tables). Attempting to replicate other kinds of relations (views, materialized views, foreign tables, unlogged tables) will result in errors. Specifically, only ordinary tables (pg_class.relkind = 'r') and, since PostgreSQL 13, partitioned tables (relkind = 'p') can participate in logical replication.

When replicating partitioned tables, replication is done by default at the child table level. By default, changes are triggered according to the leaf partitions of the partitioned table, meaning that every partition child table on the publisher needs to exist on the subscriber (of course, this partition child table on the subscriber doesn’t have to be a partition child table, it could be a partition parent table itself, or a regular table). The publication can declare whether to use the replica identity from the partition root table instead of the replica identity from the partition leaf table. This is a new feature in PostgreSQL 13 and can be specified through the publish_via_partition_root option when creating the publication.

Trigger behavior is different. Row-level triggers fire, but UPDATE OF cols type triggers don’t. Statement-level triggers only fire during initial data copying.

Logging behavior is different. Even with log_statement = 'all', SQL statements generated by replication won’t be logged.

Bidirectional replication requires extreme caution: It’s possible to have mutual publication and subscription as long as the table sets on both sides don’t overlap. But once there’s an intersection of tables, WAL infinite loops will occur.

Replication within the same instance: Logical replication within the same instance requires special caution. You must manually create logical replication slots and use existing logical replication slots when creating subscriptions, otherwise it will hang.
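For the same-instance case, a minimal sketch might look like this. The database names src_db and dst_db, the publication pub_local, and the slot name sub_local are all hypothetical, and the table t_normal is assumed to exist in both databases.

```sql
-- Connected to the publishing database (hypothetical name: src_db).
CREATE PUBLICATION pub_local FOR TABLE t_normal;
-- Create the slot by hand so that CREATE SUBSCRIPTION does not have to.
SELECT pg_create_logical_replication_slot('sub_local', 'pgoutput');

-- Connected to the subscribing database (hypothetical name: dst_db) in the same instance.
CREATE SUBSCRIPTION sub_local
    CONNECTION 'dbname=src_db'
    PUBLICATION pub_local
    WITH (create_slot = false, slot_name = 'sub_local');
```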

Only possible on primary: Currently, logical decoding from physical replication standbys is not supported, and replication slots cannot be created on standbys, so standbys cannot be publishers. But this issue may be resolved in the future.

Architecture

Logical replication begins by taking a snapshot of the publisher’s database and copying the existing data in tables based on this snapshot. Once the copy is complete, changes (inserts, updates, deletes, etc.) on the publisher are sent to the subscriber in real-time.

Logical replication uses an architecture similar to physical replication, implemented through a walsender and apply process. The publisher’s walsender process loads the logical decoding plugin (pgoutput) and begins logical decoding of WAL logs. The Logical Decoding Plugin reads changes from WAL, filters changes according to the publication definition, transforms changes into a specific format, and transmits them using the logical replication protocol. Data is transmitted to the subscriber’s apply process using the streaming replication protocol. This process maps changes to local tables when received and reapplies these changes in transaction order.

Initial Snapshot

During initialization and data copying, tables on the subscriber side are handled by a special apply process. This process creates its own temporary replication slot and copies the existing data in tables.

Once data copying is complete, the table enters synchronization mode (pg_subscription_rel.srsubstate = 's'), which ensures that the main apply process can apply changes that occurred during the data copying period using standard logical replication methods. Once synchronization is complete, control of table replication is transferred back to the main apply process, returning to normal replication mode.

Process Structure

The publisher creates a corresponding walsender process for each connection from the subscriber, sending decoded WAL logs. On the subscriber side, it creates an apply process to receive and apply changes.

Replication Slots

When creating a subscription, a logical replication slot is created on the publisher. This slot ensures that WAL logs are retained until they are successfully applied on the subscriber.

Logical Decoding

Logical decoding is the process of converting WAL records into a format that can be understood by logical replication. The pgoutput plugin is the default logical decoding plugin in PostgreSQL.

Synchronous Commit

Synchronous commit in logical replication is completed through SIGUSR1 communication between Backend and Walsender.

Temporary Data

Temporary data from logical decoding is written to disk as local log snapshots. When the walsender receives a SIGUSR1 signal from the walwriter, it reads WAL logs and generates corresponding logical decoding snapshots. These snapshots are deleted when transmission ends.

The file location is: $PGDATA/pg_logical/snapshots/{LSN Upper}-{LSN Lower}.snap

Monitoring

Logical replication uses an architecture similar to physical stream replication, so monitoring a logical replication publisher node is not much different from monitoring a physical replication primary.

Subscriber monitoring information can be obtained through the pg_stat_subscription view.

pg_stat_subscription Subscription Statistics Table

Each active subscription will have at least one record in this view, representing the Main Worker (responsible for applying logical logs).

The Main Worker has relid = NULL. If there are processes responsible for initial data copying, they will also have a record here, with relid being the table being copied.

subid | 20421

subname | pg_test_sub

pid | 5261

relid | NULL

received_lsn | 0/2A4F6B8

last_msg_send_time | 2021-02-22 17:05:06.578574+08

last_msg_receipt_time | 2021-02-22 17:05:06.583326+08

latest_end_lsn | 0/2A4F6B8

latest_end_time | 2021-02-22 17:05:06.578574+08

- received_lsn: The most recently received log position.

- latest_end_lsn: The last LSN position reported to the walsender, i.e., the confirmed_flush_lsn on the primary. However, this value is not updated very frequently.

Typically, an active subscription will have an apply process running, while disabled or crashed subscriptions won’t have records in this view. During initial synchronization, synchronized tables will have additional worker process records.
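A simple way to look at this view on the subscriber is sketched below; the column alias since_last_msg is just an illustrative name.

```sql
SELECT subname,
       pid,
       relid::regclass AS syncing_table,   -- NULL for the main apply worker
       received_lsn,
       latest_end_lsn,
       now() - last_msg_receipt_time AS since_last_msg
FROM pg_stat_subscription;
```

An enabled subscription that returns no row here most likely has no running apply worker, which is worth checking against the server log.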

pg_replication_slots Replication Slots

postgres@meta:5432/meta=# table pg_replication_slots ;

-[ RECORD 1 ]-------+------------

slot_name | pg_test_sub

plugin | pgoutput

slot_type | logical

datoid | 19355

database | meta

temporary | f

active | t

active_pid | 89367

xmin | NULL

catalog_xmin | 1524

restart_lsn | 0/2A08D40

confirmed_flush_lsn | 0/2A097F8

wal_status | reserved

safe_wal_size | NULL

The replication slots view contains both logical and physical replication slots. The main characteristics of logical replication slots are:

- The plugin field is not empty, identifying the logical decoding plugin used. Logical replication defaults to using the pgoutput plugin.

- slot_type = logical, while physical replication slots are of type physical.

- The datoid and database fields are not empty because physical replication is associated with the cluster, while logical replication is associated with the database.

Logical subscribers also appear as standard replication standbys in the pg_stat_replication view.

pg_replication_origin Replication Origin

Replication origin

table pg_replication_origin_status;

-[ RECORD 1 ]-----------

local_id | 1

external_id | pg_19378

remote_lsn | 0/0

local_lsn | 0/6BB53640

- local_id: The local ID of the replication origin, represented efficiently in 2 bytes.

- external_id: The ID of the replication origin, which can be referenced across nodes.

- remote_lsn: The most recent commit position on the source.

- local_lsn: The LSN of locally persisted commit records.

Detecting Replication Conflicts

The most reliable method of detection is always from the logs on both publisher and subscriber sides. When replication conflicts occur, you can see replication connection interruptions on the publisher:

LOG: terminating walsender process due to replication timeout

LOG: starting logical decoding for slot "pg_test_sub"

DETAIL: streaming transactions committing after 0/xxxxx, reading WAL from 0/xxxx

While on the subscriber side, you can see the specific cause of the replication conflict, for example:

logical replication worker PID 4585 exited with exit code 1

ERROR: duplicate key value violates unique constraint "pgbench_tellers_pkey","Key (tid)=(9) already exists.",,,,"COPY pgbench_tellers, line 31",,,,"","logical replication worker"

Additionally, some monitoring metrics can reflect the state of logical replication:

For example: pg_replication_slots.confirmed_flush_lsn consistently lagging behind pg_current_wal_lsn(). Or significant growth in pg_stat_replication.flush_lag/write_lag.
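These metrics can be read on the publisher with queries along the following lines. The column aliases are illustrative, and any alerting threshold is left to the reader.

```sql
-- How far each logical slot is behind the current WAL position, in bytes.
SELECT slot_name,
       active,
       pg_wal_lsn_diff(pg_current_wal_lsn(), confirmed_flush_lsn) AS confirmed_lag_bytes,
       pg_wal_lsn_diff(pg_current_wal_lsn(), restart_lsn)         AS retained_wal_bytes
FROM pg_replication_slots
WHERE slot_type = 'logical';

-- Time-based lag as seen by the walsender for each connected subscriber.
SELECT application_name, state, write_lag, flush_lag, replay_lag
FROM pg_stat_replication;
```

A slot that is inactive while retained_wal_bytes keeps growing is the typical sign of a subscription stuck on a conflict.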

Security

Tables participating in subscriptions must have their Ownership and Trigger permissions controlled by roles trusted by the superuser (otherwise, modifying these tables could cause logical replication to stop).

On the publisher node, if untrusted users have table creation permissions, publications should explicitly specify table names rather than using the wildcard ALL TABLES. That is, FOR ALL TABLES should only be used when the superuser trusts all users who have permission to create tables (non-temporary) on either the publisher or subscriber side.

The user used for replication connections must have the REPLICATION permission (or be a SUPERUSER). If this role lacks SUPERUSER and BYPASSRLS, row security policies on the publisher may be executed. If the table owner sets row-level security policies after replication starts, this configuration may cause replication to stop directly rather than the policy taking effect. The user must have LOGIN permission, and HBA rules must allow access.

To be able to replicate initial table data, the role used for replication connections must have SELECT permission on the published tables (or be a superuser).

Creating a publication requires CREATE permission in the database, and creating a FOR ALL TABLES publication requires superuser permission.

Adding tables to a publication requires owner permission on the tables.

Creating a subscription requires superuser permission because the subscription’s apply process runs with superuser privileges in the local database.

Permissions are only checked when establishing the replication connection, not when reading each change record on the publisher side, nor when applying each record on the subscriber side.

Configuration Options

Logical replication requires some configuration options to work properly.

On the publisher side, wal_level must be set to logical, max_replication_slots needs to be at least the number of subscriptions + the number used for table data synchronization. max_wal_senders needs to be at least max_replication_slots + the number reserved for physical replication.

On the subscriber side, max_replication_slots also needs to be set, with max_replication_slots needing to be at least the number of subscriptions.

max_logical_replication_workers needs to be configured to at least the number of subscriptions, plus some for data synchronization worker processes.

Additionally, max_worker_processes needs to be adjusted accordingly, to at least max_logical_replication_workers + 1. Note that some extensions and parallel queries also take workers from the worker process pool.

Configuration Parameter Example

For a 64-core machine with 1-2 publications and subscriptions, up to 6 synchronization worker processes, and up to 8 physical standbys, a sample configuration might look like this:

First, determine the number of slots: 2 subscriptions + 6 synchronization worker processes + 8 physical standbys = 16 slots. The number of WAL senders is slots + physical standbys = 16 + 8 = 24.

With synchronization worker processes limited to 6 and 2 subscriptions, set the total number of logical replication worker processes to 8.
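The arithmetic above can be sketched as a small helper. This is only an illustration of the sizing rules described in this section; the function name and dictionary keys are hypothetical, and the values are lower bounds rather than recommendations.

```python
def size_replication(subscriptions, sync_workers, physical_standbys):
    """Derive minimum settings from the sizing rules described above."""
    slots = subscriptions + sync_workers + physical_standbys
    logical_workers = subscriptions + sync_workers
    return {
        "max_replication_slots": slots,
        "max_wal_senders": slots + physical_standbys,
        "max_logical_replication_workers": logical_workers,
        "max_sync_workers_per_subscription": sync_workers,
        # lower bound only; parallel query and extensions need more
        "min_max_worker_processes": logical_workers + 1,
    }


print(size_replication(subscriptions=2, sync_workers=6, physical_standbys=8))
```

For the example machine this yields 16 slots, 24 WAL senders, and 8 logical replication workers, matching the sample configuration that follows.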

wal_level: logical # logical

max_worker_processes: 64 # default 8 -> 64, set to CPU CORE 64

max_parallel_workers: 32 # default 8 -> 32, limit by max_worker_processes

max_parallel_maintenance_workers: 16 # default 2 -> 16, limit by parallel worker

max_parallel_workers_per_gather: 0 # default 2 -> 0, disable parallel query on OLTP instance

# max_parallel_workers_per_gather: 16 # default 2 -> 16, enable parallel query on OLAP instance

max_wal_senders: 24 # 10 -> 24

max_replication_slots: 16 # 10 -> 16

max_logical_replication_workers: 8 # 4 -> 8, 6 sync worker + 1~2 apply worker

max_sync_workers_per_subscription: 6 # 2 -> 6, 6 sync worker

Quick Setup

First, set the configuration option wal_level = logical on the publisher side. This parameter requires a restart to take effect. Other parameters’ default values don’t affect usage.

Then create a replication user and add pg_hba.conf configuration items to allow external access. A typical configuration is:

CREATE USER replicator REPLICATION BYPASSRLS PASSWORD 'DBUser.Replicator';

Note that logical replication users need SELECT permission. In Pigsty, replicator has already been granted the dbrole_readonly role.

host all replicator 0.0.0.0/0 md5

host replication replicator 0.0.0.0/0 md5

Then execute in the publisher’s database:

CREATE PUBLICATION mypub FOR TABLE <tablename>;

Then execute in the subscriber’s database:

CREATE SUBSCRIPTION mysub CONNECTION 'dbname=<pub_db> host=<pub_host> user=replicator' PUBLICATION mypub;

The above configuration will start replication, first copying the initial data of the tables, then beginning to synchronize incremental changes.

Sandbox Example

Using the Pigsty standard 4-node two-cluster sandbox as an example, there are two database clusters pg-meta and pg-test. Now we’ll use pg-meta-1 as the publisher and pg-test-1 as the subscriber.

PGSRC='postgres://dbuser_admin@meta-1/meta' # Publisher

PGDST='postgres://dbuser_admin@node-1/test' # Subscriber

pgbench -is100 ${PGSRC} # Initialize Pgbench on publisher

pg_dump -Oscx -t 'pgbench*' ${PGSRC} | psql ${PGDST} # Sync table structure on subscriber (pattern quoted to avoid shell globbing)

# Create a **publication** on the publisher, adding default `pgbench` related tables to the publication set.

psql ${PGSRC} -AXwt <<-'EOF'

CREATE PUBLICATION "pg_meta_pub" FOR TABLE

pgbench_accounts,pgbench_branches,pgbench_history,pgbench_tellers;

EOF

# Create a **subscription** on the subscriber, subscribing to the publisher's publication.

psql ${PGDST} <<-'EOF'

CREATE SUBSCRIPTION pg_test_sub

CONNECTION 'host=10.10.10.10 dbname=meta user=replicator'

PUBLICATION pg_meta_pub;

EOF

Replication Process

After the subscription creation, if everything is normal, logical replication will automatically start, executing the replication state machine logic for each table in the subscription.

As shown in the following figure.

When all tables have completed and entered the r (ready) state, the existing-data synchronization stage of logical replication is finished, and the publisher and subscriber as a whole enter the synchronized state.

Logically speaking, there are therefore two state machines: a small table-level replication state machine and a large global replication state machine. Each Sync Worker is responsible for the small state machine of one table, while an Apply Worker is responsible for the large state machine of one logical replication stream.

Logical Replication State Machine

Logical replication has two kinds of workers: Sync and Apply.

Logical replication is therefore logically divided into two parts: each table is first replicated independently by a Sync worker, and once its replication progress catches up to the latest position, the table is handed over to the Apply worker.

When a subscription is created or refreshed, tables are added to the subscription set, and each table in the subscription set has a corresponding record in the pg_subscription_rel catalog showing its current replication status. A newly added table is initially in the i (initialize) state.

If the subscription’s copy_data option is true (the default) and there is an idle worker in the worker pool, PostgreSQL allocates a synchronization worker for the table and copies its existing data, and the table enters the d (copying data) state. Synchronizing table data is similar to taking a basebackup of a database cluster: the Sync Worker creates a temporary replication slot on the publisher, obtains a snapshot of the table through COPY, and completes the base data synchronization.

When the base data copy of a table is complete, the table enters the sync state (data synchronization), in which the synchronization worker catches up with the incremental changes that occurred during the copy. When the catch-up is complete, the synchronization worker marks the table as r (ready) and hands the management of its changes over to the main Apply process, indicating that the table is now in normal replication.
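As a rough illustration of tracking these per-table states, the sketch below summarizes rows shaped like (table name, state code), such as might be read from pg_subscription_rel. The codes i, d, and r are the ones named above; f and s are additional codes listed in the PostgreSQL catalog documentation. The function name and the sample rows are hypothetical.

```python
from collections import Counter

# State codes as stored in pg_subscription_rel.srsubstate.
STATE_NAMES = {
    "i": "initialize",
    "d": "copying data",
    "f": "finished table copy",
    "s": "synchronized",
    "r": "ready",
}


def summarize(rows):
    """rows: iterable of (table_name, state_code) pairs."""
    rows = list(rows)  # allow generators, since we iterate twice
    counts = Counter(STATE_NAMES.get(state, "unknown") for _, state in rows)
    all_ready = all(state == "r" for _, state in rows)
    return dict(counts), all_ready


# Hypothetical sample: two tables ready, one still copying.
sample = [("pgbench_branches", "r"), ("pgbench_tellers", "r"),
          ("pgbench_accounts", "d")]
print(summarize(sample))
```

The initial synchronization stage is complete only when the second return value is true, that is, when every table has reached r.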

Waiting for Logical Replication Synchronization

After creating a subscription, first monitor the database logs on both the publisher and subscriber sides to ensure that no errors are reported.

Synchronization Progress Tracking

The data synchronization (d) stage may take some time, depending on factors such as network card and network bandwidth, disk performance, table size and distribution, and the number of logical replication synchronization workers.

As a reference, a 1 TB database with 20 tables, including a 250 GB large table, on dual 10G network cards and with 6 data synchronization workers takes about 6 to 8 hours to complete replication.

During data synchronization, each table synchronization task creates a temporary replication slot on the source. Avoid putting unnecessary write pressure on the source primary during the initial synchronization period, so that retained WAL does not fill up the disk.

pg_stat_replication and pg_replication_slots on the publisher, together with pg_stat_subscription and pg_subscription_rel on the subscriber, provide status information about logical replication and should be watched closely.

Query Optimization: The Macro Approach with pg_stat_statements

In production databases, slow queries not only impact end-user experience but also waste system resources, increase resource saturation, cause deadlocks and transaction conflicts, add pressure to database connections, and lead to replication lag. Therefore, query optimization is one of the core responsibilities of DBAs.

There are two distinct approaches to query optimization:

Macro Optimization: Analyze the overall workload, break it down, and identify and improve the worst-performing components from top to bottom.

Micro Optimization: Analyze and improve specific queries, which requires slow query logging, mastering EXPLAIN, and understanding execution plans.

Today, let’s focus on the former. Macro optimization has three main objectives:

Reduce Resource Consumption: Lower the risk of resource saturation, optimize CPU/memory/IO, typically targeting total query execution time/IO.

Improve User Experience: The most common optimization goal, typically measured by reducing average query response time in OLTP systems.

Balance Workload: Ensure proper resource usage/performance ratios between different query groups.

The key to achieving these goals lies in data support, but where does this data come from?

— pg_stat_statements!

The Extension: PGSS

pg_stat_statements, hereafter referred to as PGSS, is the core tool for implementing the macro approach.

PGSS is developed by the PostgreSQL Global Development Group, distributed as a first-party extension alongside the database kernel. It provides methods for tracking SQL query-level metrics.

Among the many PostgreSQL extensions, if there’s one that’s “essential”, I would unhesitatingly answer: PGSS. This is why in Pigsty, we prefer to “take matters into our own hands” and enable this extension by default, along with auto_explain for micro-optimization.

PGSS needs to be explicitly loaded via shared_preload_libraries and created in the database via CREATE EXTENSION. After creating the extension, you can access query statistics through the pg_stat_statements view.

In PGSS, each query type (i.e., queries with the same structure after constants are extracted) is assigned a query ID, followed by call count, total execution time, and various other metrics. The complete schema definition is as follows (PG15+):

CREATE TABLE pg_stat_statements

(

userid OID, -- (Label) OID of user executing this statement

dbid OID, -- (Label) OID of database containing this statement

toplevel BOOL, -- (Label) Whether this is a top-level SQL statement

queryid BIGINT, -- (Label) Query ID: hash of normalized query

query TEXT, -- (Label) Text of normalized query statement

plans BIGINT, -- (Counter) Number of times this statement was planned

total_plan_time FLOAT, -- (Counter) Total time spent planning this statement

min_plan_time FLOAT, -- (Gauge) Minimum planning time

max_plan_time FLOAT, -- (Gauge) Maximum planning time

mean_plan_time FLOAT, -- (Gauge) Average planning time

stddev_plan_time FLOAT, -- (Gauge) Standard deviation of planning time

calls BIGINT, -- (Counter) Number of times this statement was executed

total_exec_time FLOAT, -- (Counter) Total time spent executing this statement

min_exec_time FLOAT, -- (Gauge) Minimum execution time

max_exec_time FLOAT, -- (Gauge) Maximum execution time

mean_exec_time FLOAT, -- (Gauge) Average execution time

stddev_exec_time FLOAT, -- (Gauge) Standard deviation of execution time

rows BIGINT, -- (Counter) Total rows returned by this statement

shared_blks_hit BIGINT, -- (Counter) Total shared buffer blocks hit

shared_blks_read BIGINT, -- (Counter) Total shared buffer blocks read

shared_blks_dirtied BIGINT, -- (Counter) Total shared buffer blocks dirtied

shared_blks_written BIGINT, -- (Counter) Total shared buffer blocks written to disk

local_blks_hit BIGINT, -- (Counter) Total local buffer blocks hit

local_blks_read BIGINT, -- (Counter) Total local buffer blocks read

local_blks_dirtied BIGINT, -- (Counter) Total local buffer blocks dirtied

local_blks_written BIGINT, -- (Counter) Total local buffer blocks written to disk

temp_blks_read BIGINT, -- (Counter) Total temporary buffer blocks read

temp_blks_written BIGINT, -- (Counter) Total temporary buffer blocks written to disk

blk_read_time FLOAT, -- (Counter) Total time spent reading blocks

blk_write_time FLOAT, -- (Counter) Total time spent writing blocks

wal_records BIGINT, -- (Counter) Total number of WAL records generated

wal_fpi BIGINT, -- (Counter) Total number of WAL full page images generated

wal_bytes NUMERIC, -- (Counter) Total number of WAL bytes generated

jit_functions BIGINT, -- (Counter) Number of JIT-compiled functions

jit_generation_time FLOAT, -- (Counter) Total time spent generating JIT code

jit_inlining_count BIGINT, -- (Counter) Number of times functions were inlined

jit_inlining_time FLOAT, -- (Counter) Total time spent inlining functions

jit_optimization_count BIGINT, -- (Counter) Number of times queries were JIT-optimized

jit_optimization_time FLOAT, -- (Counter) Total time spent on JIT optimization

jit_emission_count BIGINT, -- (Counter) Number of times code was JIT-emitted

jit_emission_time FLOAT, -- (Counter) Total time spent on JIT emission

PRIMARY KEY (userid, dbid, queryid, toplevel)

);

PGSS View SQL Definition (PG 15+ version)

PGSS has some limitations: First, currently executing queries are not included in these statistics and need to be viewed from pg_stat_activity. Second, failed queries (e.g., statements canceled due to statement_timeout) are not counted in these statistics — this is a problem for error analysis, not query optimization.

Finally, the stability of the query identifier queryid requires special attention: When the database binary version and system data directory are identical, the same query type will have the same queryid (i.e., on physical replication primary and standby, query types have the same queryid by default), but this is not the case for logical replication. However, users should not rely too heavily on this property.

Raw Data

The columns in the PGSS view can be categorized into three types:

Descriptive Label Columns: Query ID (queryid), database ID (dbid), user (userid), a top-level query flag, and normalized query text (query).

Measured Metrics (Gauge): Eight statistical columns related to minimum, maximum, mean, and standard deviation, prefixed with min, max, mean, stddev, and suffixed with plan_time and exec_time.

Cumulative Metrics (Counter): All other metrics except the above eight columns and label columns, such as calls, rows, etc. The most important and useful metrics are in this category.

First, let’s explain queryid: queryid is the hash value of a normalized query after parsing and constant stripping, so it can be used to identify the same query type. Different query statements may have the same queryid (same structure after normalization), and the same query statement may have different queryids (e.g., due to different search_path, leading to different actual tables being queried).

The same query might be executed by different users in different databases. Therefore, in the PGSS view, the four label columns queryid, dbid, userid, and toplevel together form the “primary key” that uniquely identifies a record.

For metric columns, measured metrics (GAUGE) are mainly the eight statistics related to execution time and planning time. However, users cannot effectively control the statistical range of these metrics, so their practical value is limited.

The truly important metrics are cumulative metrics (Counter), such as:

calls: Number of times this query group was called.

total_exec_time + total_plan_time: Total time spent by the query group.

rows: Total rows returned by the query group.

shared_blks_hit + shared_blks_read: Total number of buffer pool hit and read operations.

wal_bytes: Total WAL bytes generated by queries in this group.

blk_read_time and blk_write_time: Total time spent on block I/O operations.

Here, the most meaningful metrics are calls and total_exec_time, which can be used to calculate the query group’s core metrics QPS (throughput) and RT (latency/response time), but other metrics are also valuable references.

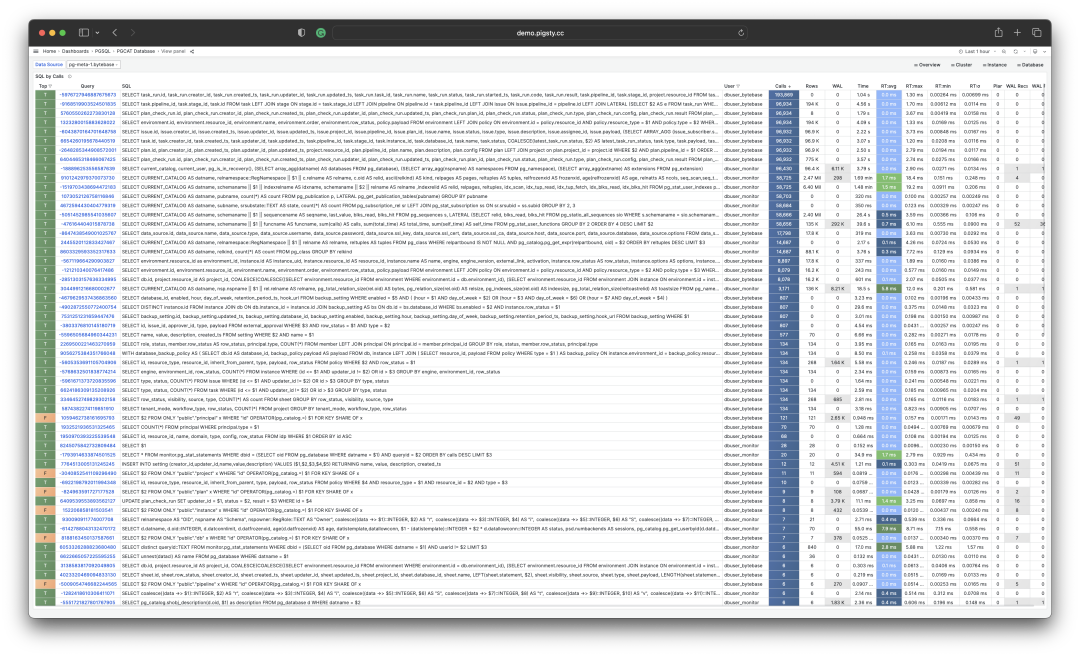

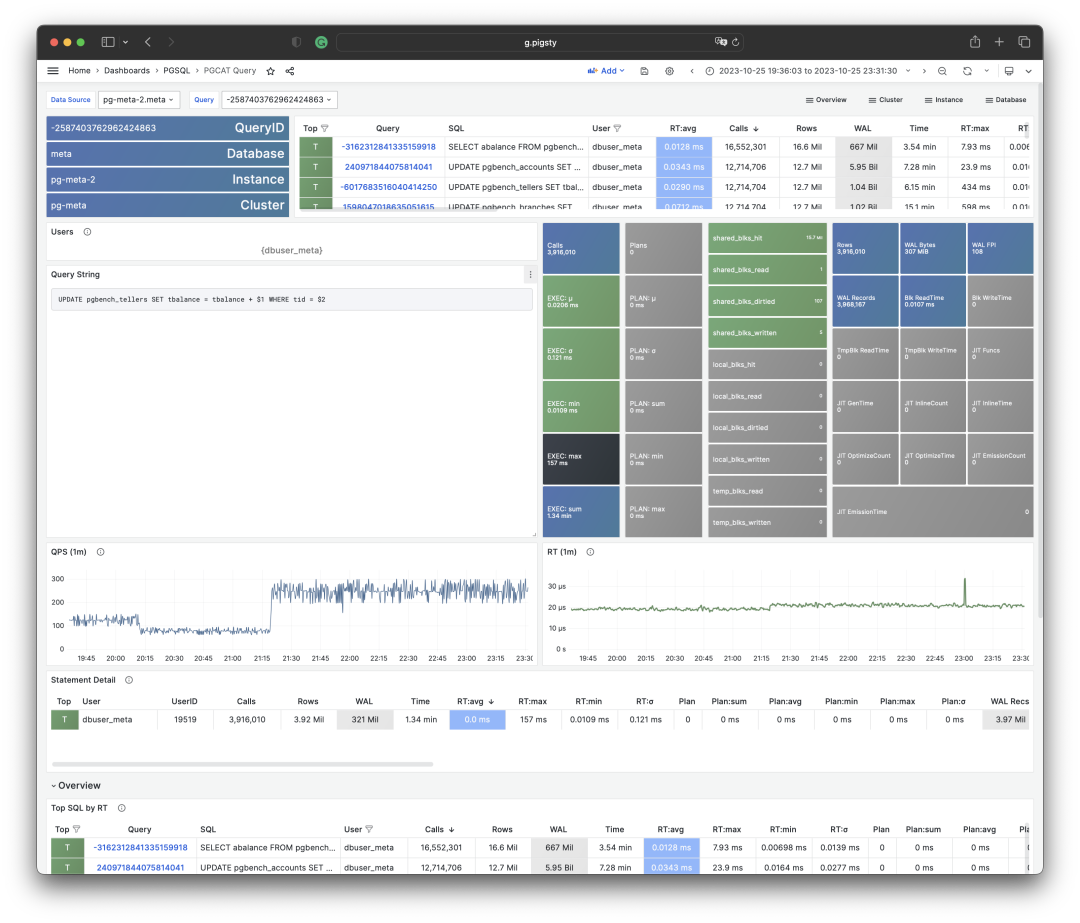

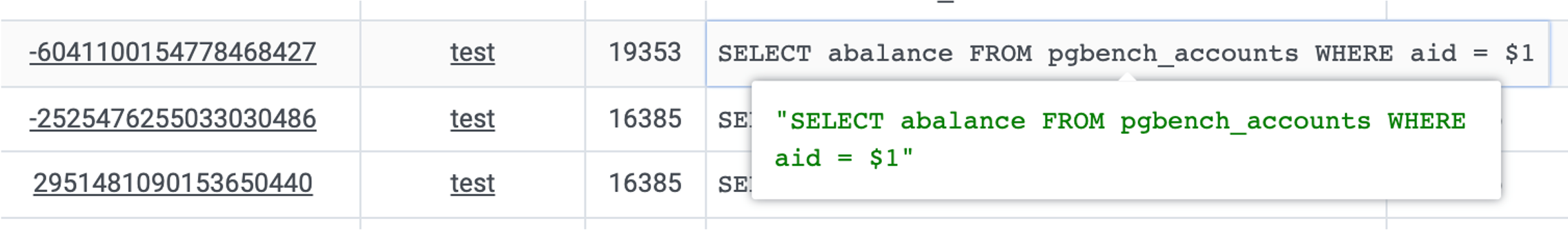

Visualization of a query group snapshot from the PGSS view

To interpret cumulative metrics, data from a single point in time is insufficient. We need to compare at least two snapshots to draw meaningful conclusions.

As a special case, if your area of interest happens to be from the beginning of the statistical period (usually when the extension was enabled) to the present, then you indeed don’t need to compare “two snapshots”. But users’ time granularity of interest is usually not this coarse, often being in minutes, hours, or days.

Calculating historical time-series metrics based on multiple PGSS query group snapshots

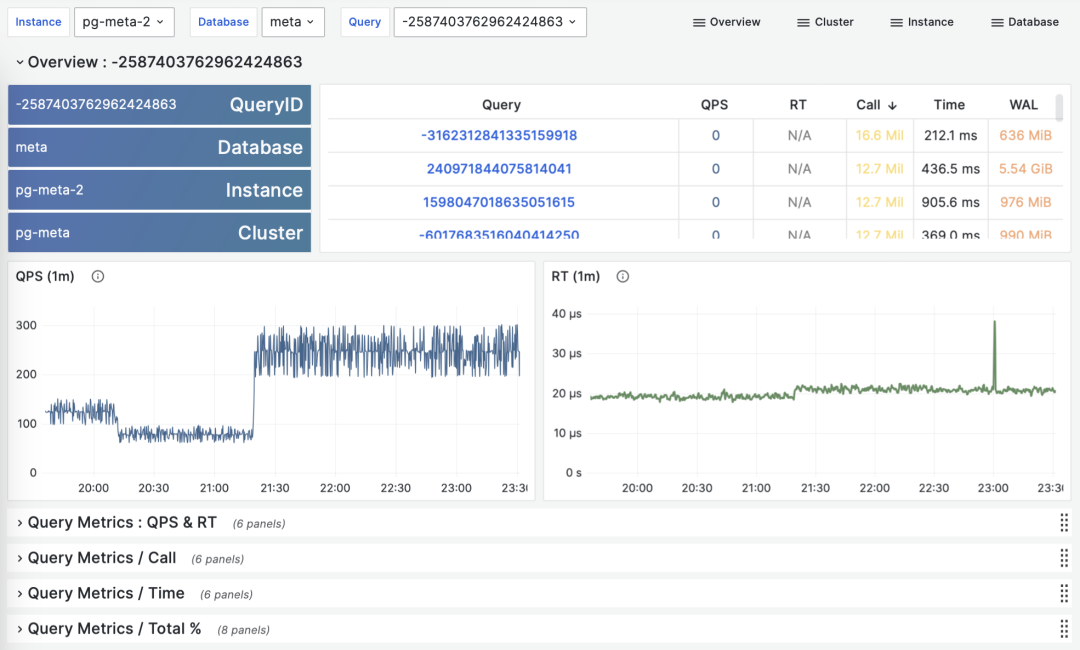

Fortunately, tools like Pigsty monitoring system regularly (default every 10s) capture snapshots of top queries (Top256 by execution time). With many different types of cumulative metrics (Metrics) at different time points, we can calculate three important derived metrics for any cumulative metric:

dM/dt: The time derivative of metric M, i.e., the increment per second.

dM/dc: The derivative of metric M with respect to call count, i.e., the average increment per call.

%M: The percentage of metric M in the entire workload.

These three types of metrics correspond exactly to the three objectives of macro optimization. The time derivative dM/dt reveals resource usage per second, typically used for the objective of reducing resource consumption. The call derivative dM/dc reveals resource usage per call, typically used for the objective of improving user experience. The percentage metric %M shows the proportion of a query group in the entire workload, typically used for the objective of balancing workload.
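As a minimal sketch of how the three derived metrics relate, the function below takes two snapshots of one cumulative metric and computes dM/dt, dM/dc, and %M per query group. The snapshot layout (a dict keyed by queryid) and the sample numbers are assumptions made for illustration, not a format produced by PGSS or Pigsty.

```python
def derived_metrics(snap1, snap2, t1, t2, metric):
    """snapshots: {queryid: {"calls": int, metric: number}}; t1, t2 in seconds."""
    deltas = {}
    for qid, row2 in snap2.items():
        row1 = snap1.get(qid, {"calls": 0, metric: 0})
        deltas[qid] = (row2[metric] - row1[metric],
                       row2["calls"] - row1["calls"])
    total = sum(dm for dm, _ in deltas.values())
    return {
        qid: {
            "dM/dt": dm / (t2 - t1),                       # increment per second
            "dM/dc": dm / dc if dc else None,              # increment per call
            "%M": 100.0 * dm / total if total else None,   # share of workload
        }
        for qid, (dm, dc) in deltas.items()
    }


# Hypothetical snapshots taken 10 seconds apart.
s1 = {1: {"calls": 100, "total_exec_time": 500.0},
      2: {"calls": 10, "total_exec_time": 1000.0}}
s2 = {1: {"calls": 200, "total_exec_time": 700.0},
      2: {"calls": 20, "total_exec_time": 1800.0}}
print(derived_metrics(s1, s2, 0, 10, "total_exec_time"))
```

In this made-up example, query group 2 is called ten times less often than group 1 but accounts for 80% of the execution time, which is exactly the kind of contrast the three views are meant to expose.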

Time Derivatives

Let’s first look at the first type of metric: time derivatives. Here, we can use metrics M including: calls, total_exec_time, rows, wal_bytes, shared_blks_hit + shared_blks_read, and blk_read_time + blk_write_time. Other metrics are also valuable references, but let’s start with the most important ones.

Visualization of time derivative metrics dM/dt

The calculation of these metrics is quite simple:

- First, calculate the difference in metric value M between two snapshots: M2 - M1

- Then, calculate the time difference between two snapshots: t2 - t1

- Finally, calculate (M2 - M1) / (t2 - t1)

Production environments typically use sampling intervals of 5s, 10s, 15s, 30s, 60s. For workload analysis, 1m, 5m, 15m are commonly used as analysis window sizes.

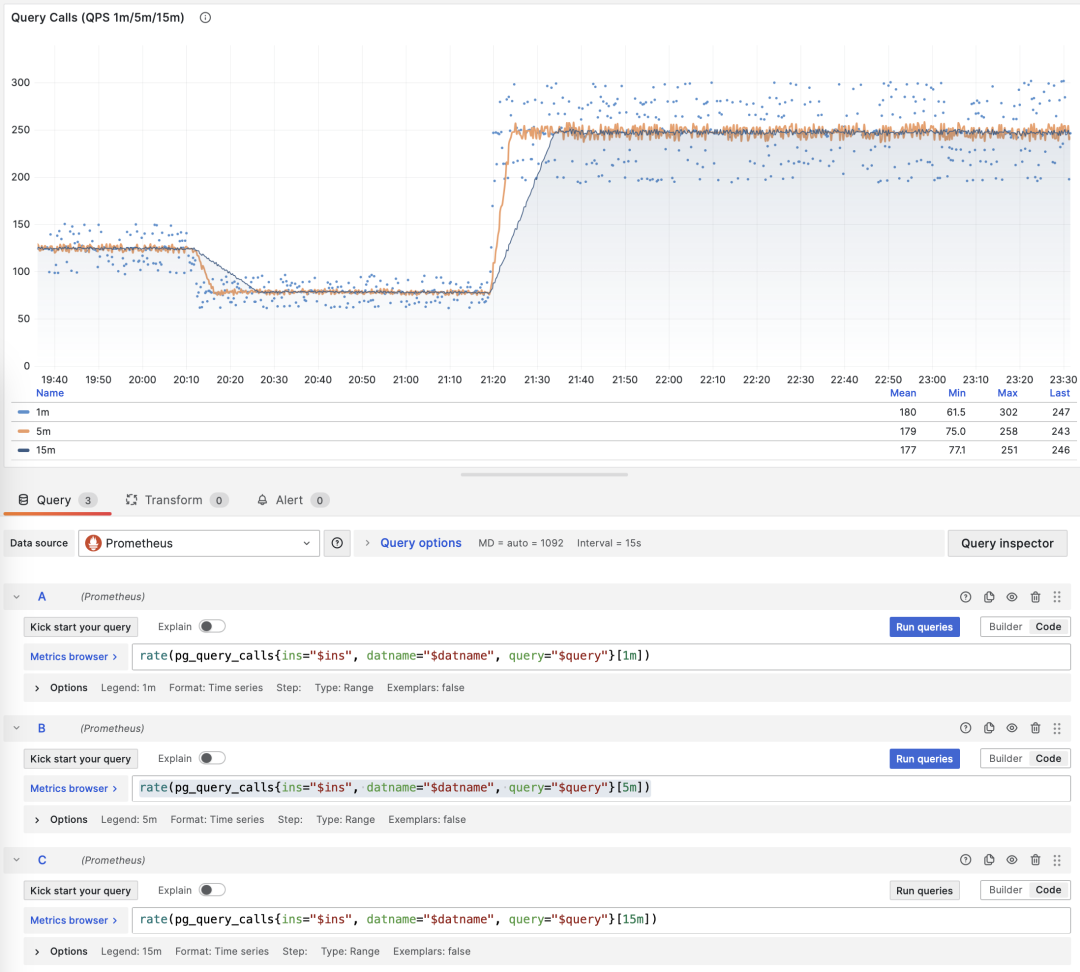

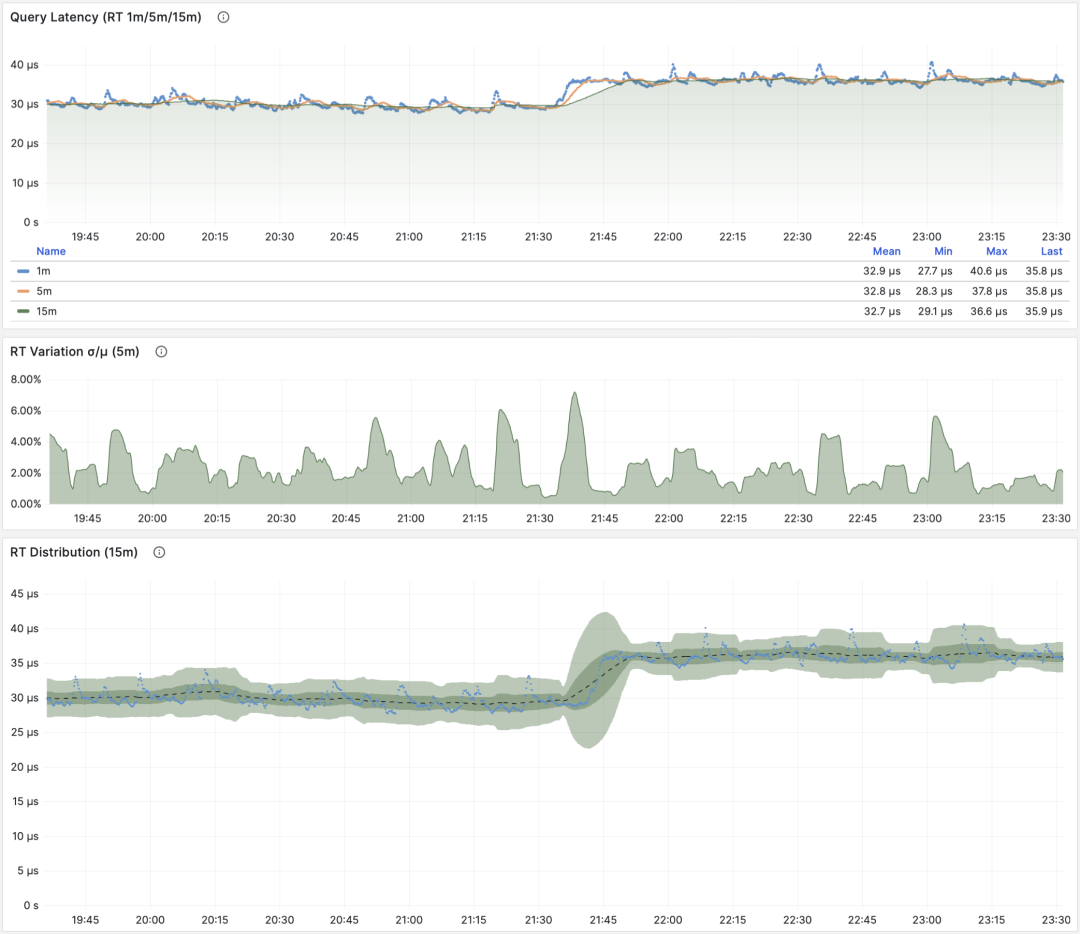

For example, when calculating QPS, we calculate QPS for the last 1 minute, 5 minutes, and 15 minutes respectively. Longer windows result in smoother curves, better reflecting long-term trends; but they hide short-term fluctuation details, making it harder to detect instant anomalies. Therefore, metrics of different granularities need to be considered together.
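To make the effect of window size concrete, here is a simplified sketch that computes the average rate of a counter over a trailing window. It is not a reimplementation of PromQL's rate() (no extrapolation, no counter-reset handling); the function name and the sample series are hypothetical.

```python
def windowed_rate(samples, window):
    """Average per-second rate over the trailing `window` seconds.

    samples: list of (timestamp_seconds, counter_value), sorted by time.
    """
    t_end, v_end = samples[-1]
    inside = [(t, v) for t, v in samples if t >= t_end - window]
    t_start, v_start = inside[0]  # oldest sample still inside the window
    if t_end == t_start:
        return None  # a single sample cannot produce a rate
    return (v_end - v_start) / (t_end - t_start)


# Hypothetical calls counter sampled every 10 s for 15 minutes:
# a steady 100 calls/s, then a burst of 400 calls/s in the last minute.
samples = [(t, 100 * t if t <= 840 else 84_000 + 400 * (t - 840))
           for t in range(0, 901, 10)]

for window in (60, 300, 900):
    print(window, windowed_rate(samples, window))
```

With this series the 1-minute window reports 400 calls/s, the 5-minute window 160, and the 15-minute window 120: the burst is obvious in the short window and heavily smoothed in the long one.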

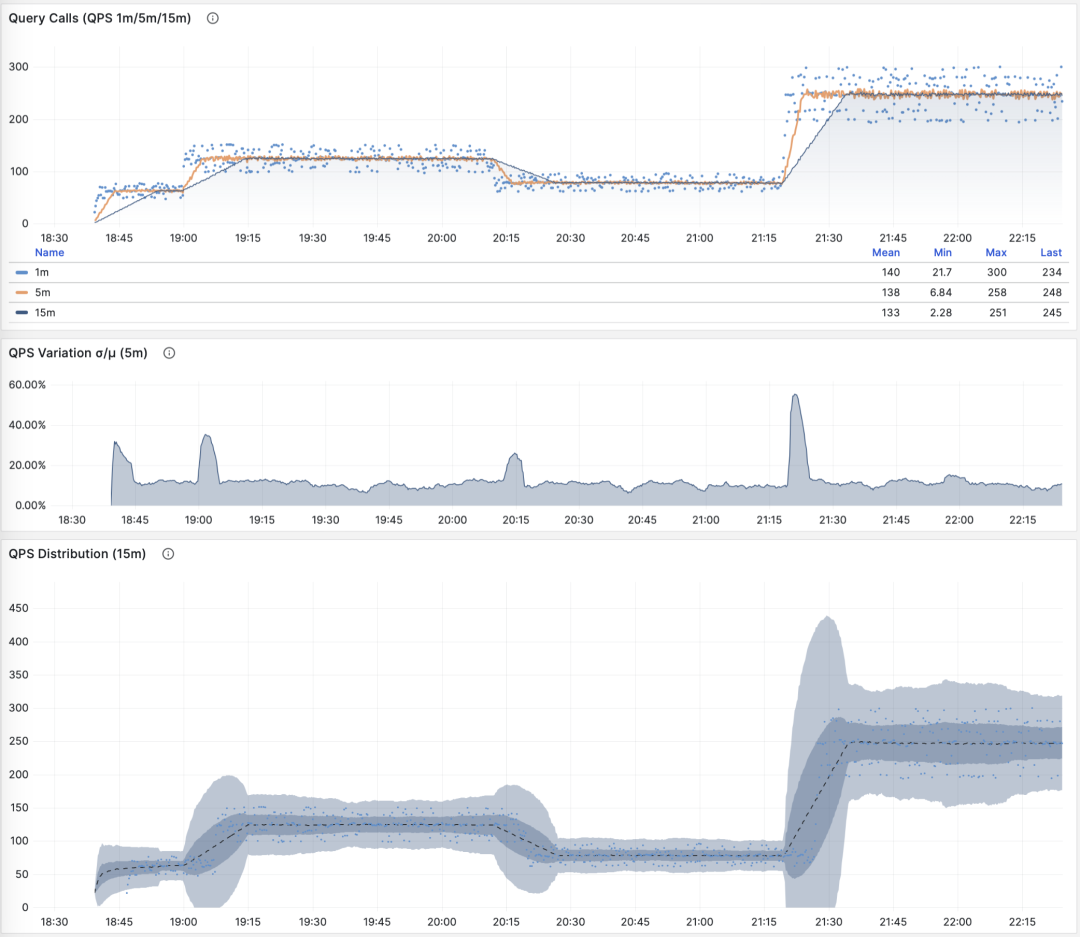

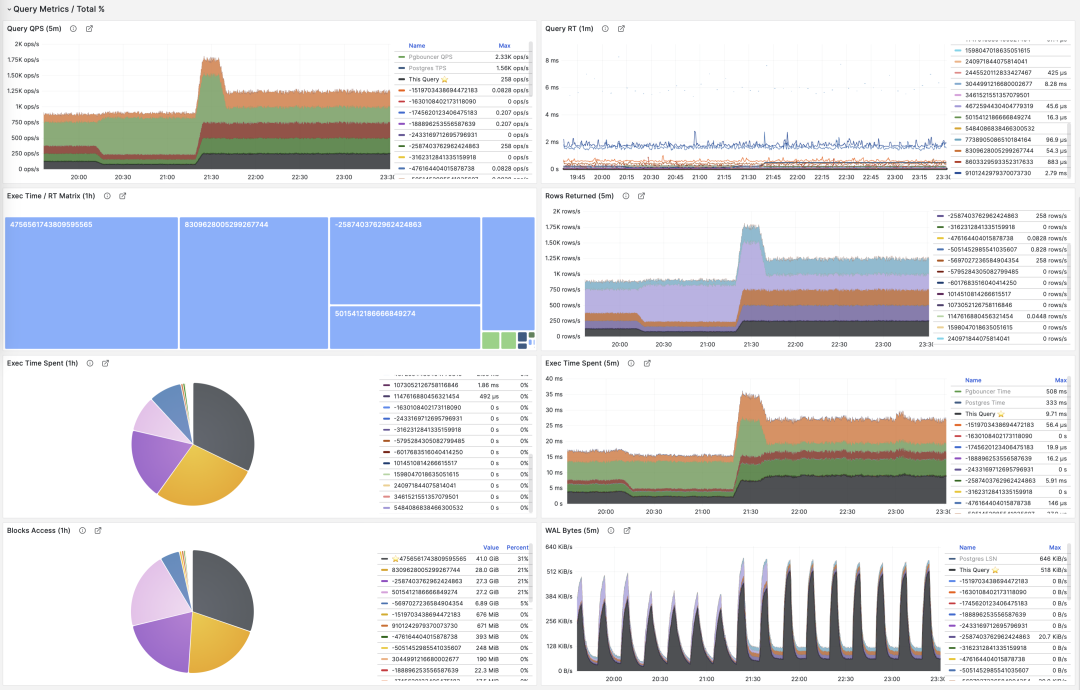

Showing QPS for a specific query group in 1/5/15 minute windows

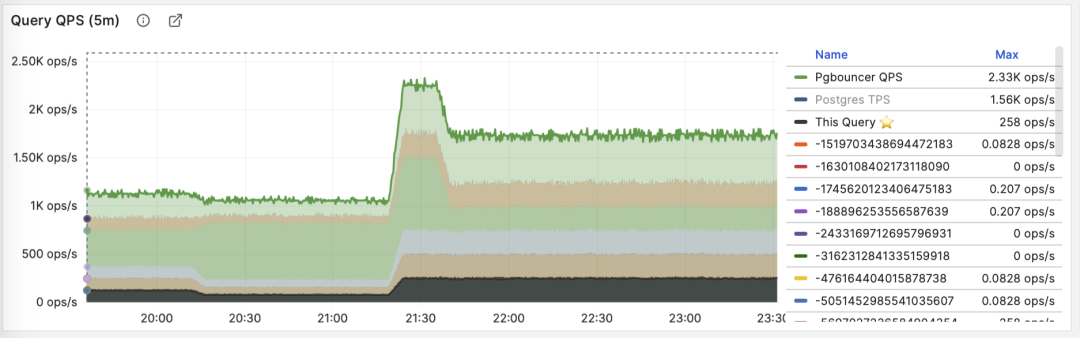

If you use Pigsty / Prometheus to collect monitoring data, you can easily perform these calculations using PromQL. For example, to calculate the QPS metric for all queries in the last minute, you can use: rate(pg_query_calls{}[1m])

QPS

When M is calls, the time derivative is QPS, with units of queries per second (req/s). This is a very fundamental metric. Query QPS is a throughput metric that directly reflects the load imposed by the business. If a query’s throughput is unusually high (e.g., above 10,000) or unusually low (e.g., below 1), it might be worth attention.

QPS: 1/5/15 minute µ/CV, ±1/3σ distribution

If we sum up the QPS metrics of all query groups (and haven’t exceeded PGSS’s collection range), we get the so-called “global QPS”. Another way to obtain global QPS is through client-side instrumentation, collection at connection pool middleware like Pgbouncer, or using ebpf probes. But none are as convenient as PGSS.

Note that QPS metrics don’t have horizontal comparability in terms of load. Different query groups may have the same QPS, while individual query execution times may vary dramatically. Even the same query group may produce vastly different load levels at different time points due to execution plan changes. Execution time per second is a better metric for measuring load.

Execution Time Per Second

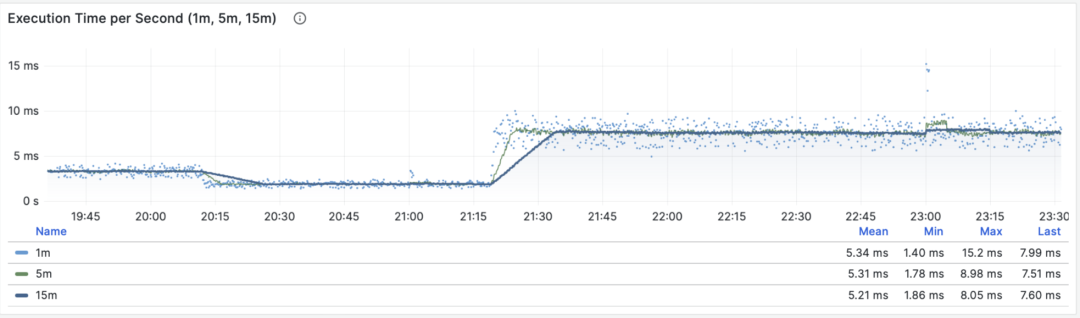

When M is total_exec_time (+ total_plan_time, optional), we get one of the most important metrics in macro optimization: execution time spent on the query group. Interestingly, the units of this derivative are seconds per second, so the numerator and denominator cancel out, making it actually a dimensionless metric.

This metric’s meaning is: how many seconds per second the server spends processing queries in this group. For example, 2 s/s means the server spends two seconds of execution time per second on this group of queries; for multi-core CPUs, this is certainly possible: just use all the time of two CPU cores.

Execution time per second: 1/5/15 minute mean

Therefore, this value can also be understood as a percentage: it can exceed 100%. From this perspective, it’s a metric similar to host load1, load5, load15, revealing the load level produced by this query group. If divided by the number of CPU cores, we can even get a normalized query load contribution metric.

However, we need to note that execution time includes time spent waiting for locks and I/O. So it’s indeed possible that a query has a long execution time but doesn’t impact CPU load. Therefore, for detailed analysis of slow queries, we need to further analyze with reference to wait events.
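The calculation can be sketched as follows. Note that PGSS reports total_exec_time in milliseconds, so the delta is converted to seconds first. The function name and the sample numbers are illustrative assumptions, and, as the caveat above says, the result includes lock and I/O wait time, not just CPU time.

```python
def exec_load(exec_ms_1, exec_ms_2, t1, t2, cpu_cores):
    """Return (seconds of execution per second, same value per CPU core)."""
    # total_exec_time is reported in milliseconds; convert to seconds
    busy_seconds = (exec_ms_2 - exec_ms_1) / 1000.0
    load = busy_seconds / (t2 - t1)
    return load, load / cpu_cores


# Hypothetical: 120,000 ms of execution time accumulated in 60 s on 64 cores.
print(exec_load(0, 120_000, 0, 60, cpu_cores=64))  # (2.0, 0.03125)
```

Here the query group consumes 2 s/s in total, which is about 3% of a 64-core machine when normalized.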

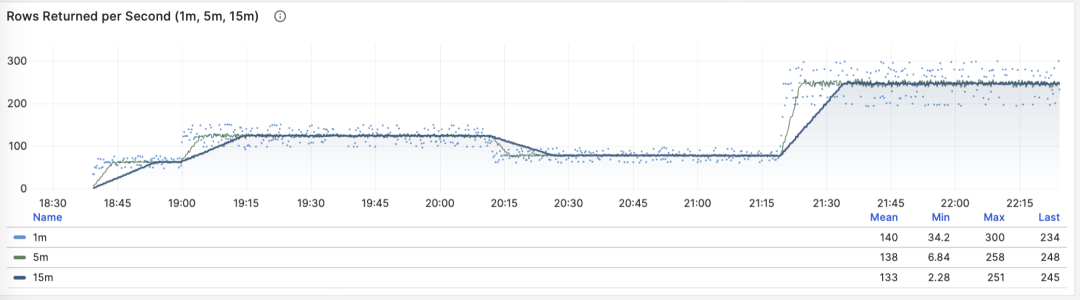

Rows Per Second

When M is rows, we get the number of rows returned per second by this query group, with units of rows per second (rows/s). For example, 10000 rows/s means this type of query returns 10,000 rows of data to the client per second. Returned rows consume client processing resources, making this a very valuable reference metric when we need to examine application client data processing pressure.

Rows returned per second: 1/5/15 minute mean

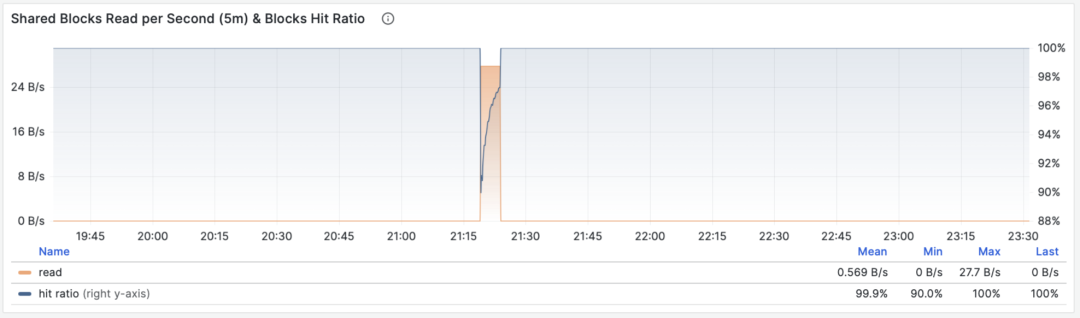

Shared Buffer Access Bandwidth

When M is shared_blks_hit + shared_blks_read, we get the number of shared buffer blocks hit/read per second. If we multiply this by the default block size of 8KiB (other sizes, e.g., 32KiB, are possible but rare), we get the bandwidth of a query type “accessing” memory/disk, with units of bytes per second.

For example, if a certain query type accesses 500,000 shared buffer blocks per second, this is equivalent to 3.8 GiB/s of internal data access. That is a significant load and might make the query a good candidate for optimization. You should probably check whether this query really justifies such resource consumption.

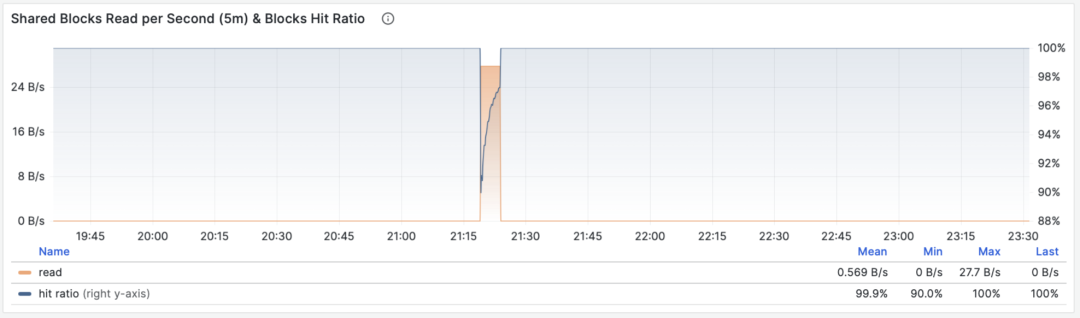

Shared buffer access bandwidth and buffer hit rate

Another valuable derived metric is buffer hit rate: hit / (hit + read), which can be used to analyze possible causes of performance changes — cache misses. Of course, repeated access to the same shared buffer pool block doesn’t actually result in a new read, and even if it does read, it might not be from disk but from memory in FS Cache. So this is just a reference value, but it is indeed a very important macro query optimization reference metric.
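Both quantities are simple arithmetic on snapshot deltas. The sketch below assumes the default 8 KiB block size; the function name and the split between hits and reads are made up for illustration.

```python
BLOCK_SIZE = 8192  # default 8 KiB block size; adjust if compiled differently


def buffer_access(hit_delta, read_delta, seconds, block_size=BLOCK_SIZE):
    """Return (access bandwidth in bytes/s, buffer hit rate)."""
    blocks = hit_delta + read_delta
    bandwidth = blocks * block_size / seconds
    hit_rate = hit_delta / blocks if blocks else None
    return bandwidth, hit_rate


# Hypothetical: 500,000 blocks accessed in one second, 1% of them read.
bw, rate = buffer_access(hit_delta=495_000, read_delta=5_000, seconds=1)
print(f"{bw / 2**30:.2f} GiB/s, hit rate {rate:.1%}")  # 3.81 GiB/s, hit rate 99.0%
```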

WAL Log Volume

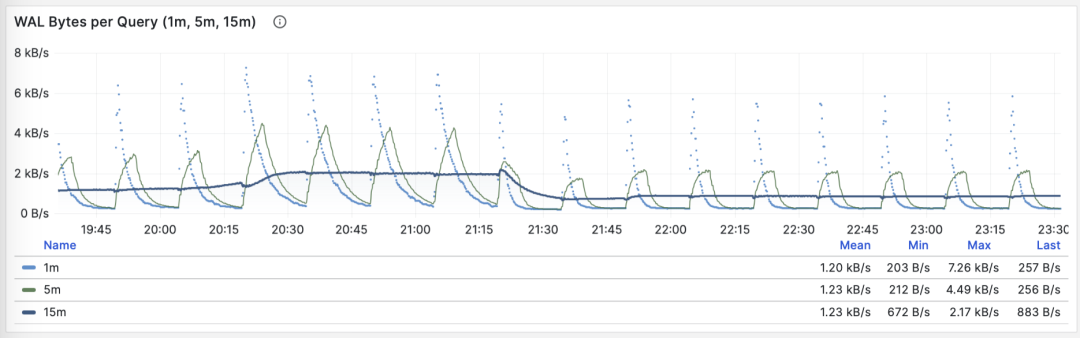

When M is wal_bytes, we get the rate at which this query generates WAL, with units of bytes per second (B/s). This metric was newly introduced in PostgreSQL 13 and can be used to quantitatively reveal the WAL size generated by queries: the more and faster WAL is written, the greater the pressure on disk flushing, physical/logical replication, and log archiving.

A typical example is: BEGIN; DELETE FROM xxx; ROLLBACK;. Such a transaction deletes a lot of data, generates a large amount of WAL, but performs no useful work. This metric can help identify such cases.

WAL bytes per second: 1/5/15 minute mean

There are two things to note here. First, as mentioned above, PGSS cannot track failed statements; in this example, however, the transaction was rolled back but the statements themselves executed successfully, so they are still tracked by PGSS.

The second thing is: in PostgreSQL, not only INSERT/UPDATE/DELETE operations generate WAL logs, SELECT operations might also generate WAL logs, because SELECT might modify tuple marks (Hint Bit) causing page checksums to change, triggering WAL log writes.

There’s even the possibility that if the read load is very large, it might have a higher probability of causing FPI image generation, producing considerable WAL log volume. You can check this further through the wal_fpi metric.

Shared buffer dirty/write-back bandwidth

For versions below 13, shared buffer dirty/write-back bandwidth metrics can serve as approximate alternatives for analyzing write load characteristics of query groups.

I/O Time

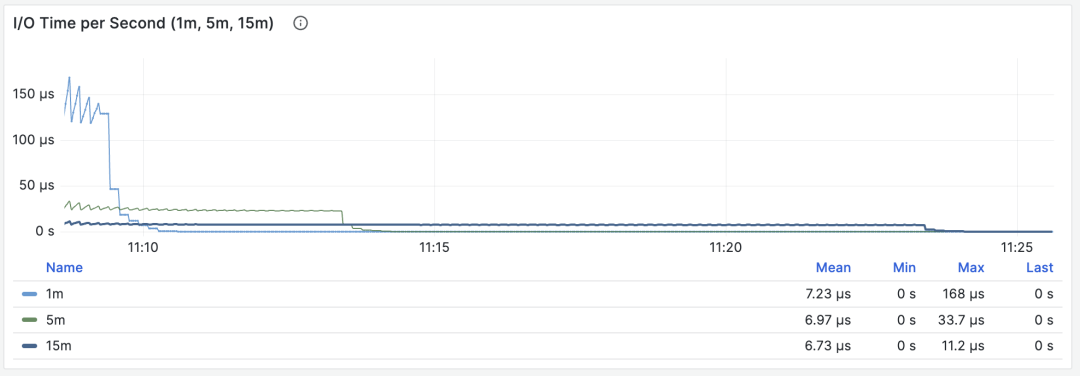

When M is blk_read_time + blk_write_time, we get the proportion of time the query group spends on block I/O, with units of “seconds per second”. Like the execution time per second metric, it reflects the proportion of time occupied by such operations.

I/O time is helpful for analyzing query spike causes

Because PostgreSQL uses the operating system’s FS Cache, even if block reads/writes are performed here, they might still be buffer operations at the filesystem level. So this can only be used as a reference metric, requiring careful use and comparison with disk I/O monitoring on the host node.

Time derivative metrics dM/dt can reveal the complete picture of workload within a database instance/cluster, especially useful for scenarios aiming to optimize resource usage. But if your optimization goal is to improve user experience, then another set of metrics — call derivatives dM/dc — might be more relevant.

Call Derivatives

Above we’ve calculated time derivatives for six important metrics. Another type of derived metric calculates derivatives with respect to “call count”, where the denominator changes from the time difference to the difference in call count.

This type of metric is even more important than the former, as it provides several core metrics directly related to user experience, such as the most important — Query Response Time (RT), or Latency.

The calculation of these metrics is also simple:

- Calculate the difference in metric value M between two snapshots: M2 - M1

- Then calculate the difference in calls between two snapshots: c2 - c1

- Finally calculate (M2 - M1) / (c2 - c1)
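The steps above can be sketched as a small function. The two guards are an added assumption rather than part of the original description: an interval with no calls has no per-call average, and counters that move backwards (for example after the statistics are reset) should not be turned into a negative value.

```python
def per_call(m1, m2, c1, c2):
    """Average increment of metric M per call between two snapshots."""
    dm, dc = m2 - m1, c2 - c1
    if dc <= 0 or dm < 0:
        # no calls in the interval, or counters went backwards
        return None
    return dm / dc


# Hypothetical: total_exec_time grew from 500 to 700 over 100 calls.
print(per_call(500.0, 700.0, 100, 200))  # 2.0
```

Dividing both deltas by the same time difference does not change the result, which is why the same value can also be obtained by dividing two time derivatives.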

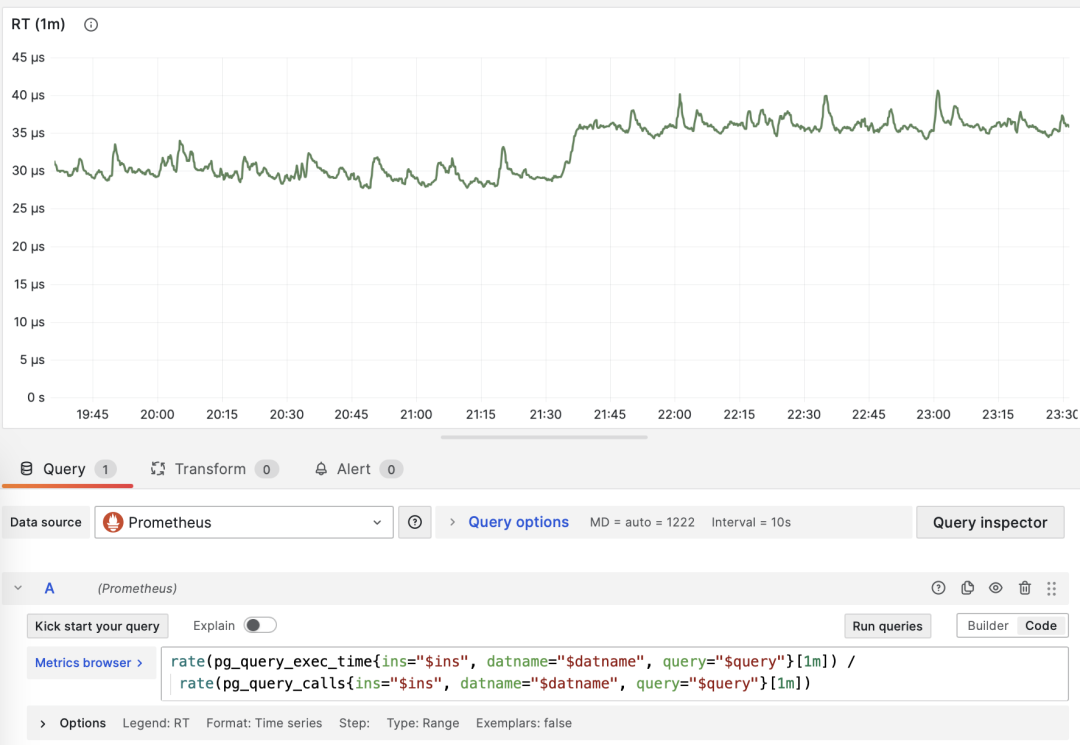

For PromQL implementation, call derivative metrics dM/dc can be calculated from “time derivative metrics dM/dt”. For example, to calculate RT, you can use execution time per second / queries per second, dividing the two metrics:

rate(pg_query_exec_time{}[1m]) / rate(pg_query_calls{}[1m])

dM/dt can be used to calculate dM/dc

Call Count

When M is calls, taking its own derivative is meaningless (result will always be 1).

Average Latency/Response Time/RT

When M is total_exec_time, the call derivative is RT, or response time/latency. Its unit is seconds (s). RT directly reflects user experience and is the most important metric in macro performance analysis. This metric’s meaning is: the average query response time of this query group on the server. If conditions allow enabling pg_stat_statements.track_planning, you can also add total_plan_time to the calculation for more precise and representative results.

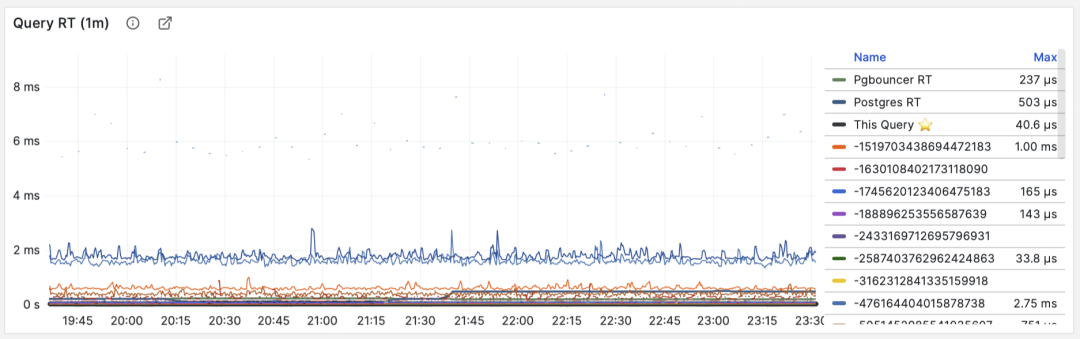

RT: statement level/connection pool level/database level

Unlike throughput metrics like QPS, RT has horizontal comparability: for example, if a query group’s RT is normally within 1 millisecond, then events exceeding 10ms should be considered serious deviations for analysis.

When failures occur, RT views are also helpful for root cause analysis: if all queries’ overall RT slows down, it’s most likely related to insufficient resources. If only specific query groups’ RT changes, it’s more likely that some slow queries are causing problems and should be further investigated. If RT changes coincide with application deployment, you should consider rolling back these deployments.

Moreover, in performance analysis, stress testing, and benchmarking, RT is the most important metric. You can evaluate system performance by comparing typical queries’ latency performance in different environments (e.g., different PG versions, hardware, configuration parameters) and use this as a basis for continuous system performance adjustment and improvement.

RT is so important that RT itself spawns many downstream metrics: 1-minute/5-minute/15-minute means µ and standard deviations σ are naturally essential; past 15 minutes’ ±σ, ±3σ can be used to measure RT fluctuation range, and past 1 hour’s 95th, 99th percentiles are also valuable references.
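As a rough sketch of these downstream metrics, the function below derives the mean, standard deviation, σ bands, and percentiles from a list of RT samples using only the Python standard library. The function name and dictionary keys are hypothetical, and at least two samples are required.

```python
import statistics


def rt_summary(rt_samples):
    """Summarize RT samples (e.g. one value per scrape over a time window)."""
    mu = statistics.mean(rt_samples)
    sigma = statistics.pstdev(rt_samples)
    cuts = statistics.quantiles(rt_samples, n=100)  # 99 percentile cut points
    return {
        "mean": mu,
        "stddev": sigma,
        "band_1sigma": (mu - sigma, mu + sigma),
        "band_3sigma": (mu - 3 * sigma, mu + 3 * sigma),
        "p95": cuts[94],
        "p99": cuts[98],
    }


# Hypothetical RT samples in milliseconds, with one outlier.
print(rt_summary([1.0, 1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 12.0]))
```

A sample that falls far outside the ±3σ band of the recent window is a natural candidate for the “serious deviation” described above.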

RT is the core metric for evaluating OLTP workloads, and its importance cannot be overemphasized.

Average Rows Returned

When M is rows, we get the average rows returned per query, with units of rows per query. For OLTP workloads, typical query patterns are point queries, returning a few rows of data per query.

Querying single record by primary key, average rows returned stable at 1

If a query group returns hundreds or even thousands of rows to the client per query, it should be examined. If this is by design, like batch loading tasks/data dumps, then no action is needed. If this is initiated by the application/client, there might be errors, such as statements missing LIMIT restrictions, queries lacking pagination design. Such queries should be adjusted and fixed.

Average Shared Buffer Reads/Hits

When M is shared_blks_hit + shared_blks_read, we get the average number of shared buffer “hits” and “reads” per query. If we multiply this by the default block size of 8KiB, we get the amount of data this query type accesses per execution, with units of bytes per query: how many MB of data does each query access/read on average?

Querying single record by primary key, average rows returned stable at 1

The average data accessed by a query typically matches the average rows returned. If your query returns only a few rows on average but accesses megabytes or gigabytes of data blocks, you need to pay special attention: such queries are very sensitive to data hot/cold state. If all blocks are in the buffer, its performance might be acceptable, but if starting cold from disk, execution time might change dramatically.

Of course, don’t forget PostgreSQL’s double caching issue. The so-called “read” data might have already been cached once at the operating system filesystem level. So you need to cross-reference with operating system monitoring metrics, or system views like pg_stat_kcache, pg_stat_io for analysis.

Another pattern worth attention is sudden changes in this metric, which usually means the query group’s execution plan might have flipped/degraded, very worthy of attention and further research.

Average WAL Log Volume

When M is wal_bytes, we get the average WAL size generated per query, a field newly introduced in PostgreSQL 13. This metric can measure a query’s change footprint size and calculate important evaluation parameters like read/write ratios.

Stable QPS with periodic WAL fluctuations, can infer FPI influence

Another use is checkpoint tuning: if you observe periodic fluctuations in this metric (with a period approximately equal to checkpoint_timeout), you can reduce the amount of WAL generated by queries by adjusting checkpoint spacing.

Call derivative metrics dM/dc can reveal a query type’s workload characteristics, very useful for optimizing user experience. Especially RT is the golden metric for performance optimization, and its importance cannot be overemphasized.

dM/dc metrics provide us with important absolute value metrics, but to find which queries have the greatest potential optimization benefits, we also need %M percentage metrics.

Percentage Metrics

Now let’s examine the third type of metric, percentage metrics. These show the proportion of a query group relative to the overall workload.

Percentage metrics %M help us identify “major contributors” in terms of frequency, time, and I/O time/count, and find the query groups with the greatest potential optimization benefits, serving as important criteria for priority assessment.

Common percentage metrics %M overview

For example, if a query group has an absolute value of 1000 QPS, it might seem significant; but if it only accounts for 3% of the entire workload, then the benefits and priority of optimizing this query aren’t that high. Conversely, if it accounts for more than 50% of the entire workload, optimizing it away could cut the instance’s query load roughly in half, so its optimization priority is very high.

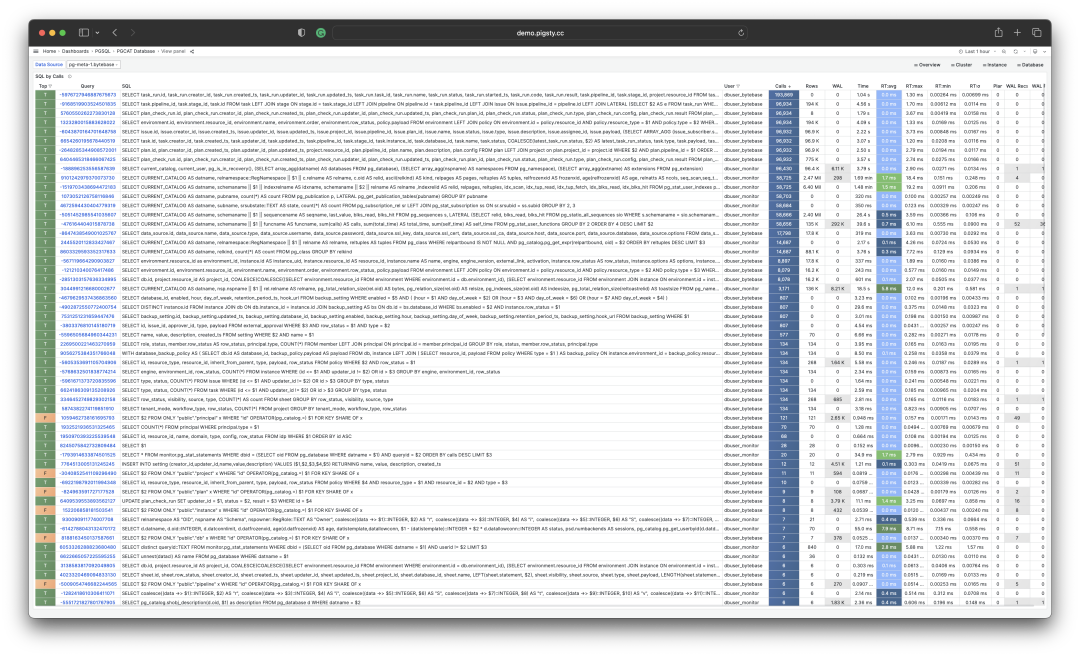

A common optimization strategy is to first rank all query groups by the dM/dt values, over a period of time, of the important metrics mentioned above: calls, total_exec_time, rows, wal_bytes, shared_blks_hit + shared_blks_read, and blk_read_time + blk_write_time. Take the TopN for each metric (e.g., N=10 or more) and add them to the optimization candidate list.

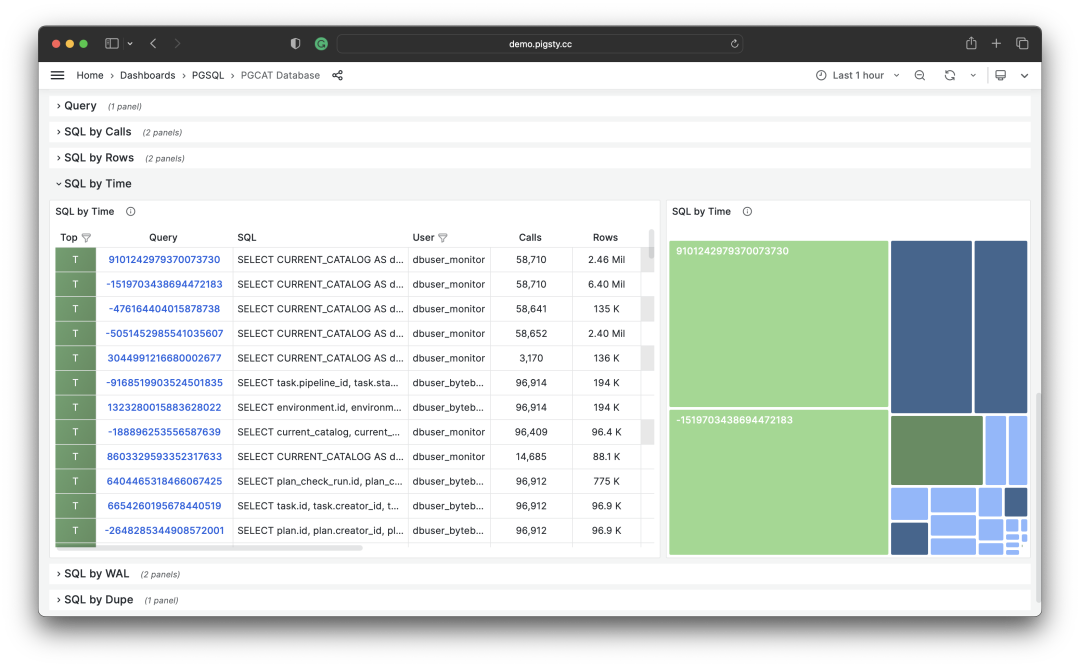

Selecting TopSQL for optimization based on specific criteria
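The TopN selection described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `rates` dictionary, its query IDs, and its numbers are made up, and `top_n_candidates` is a hypothetical helper, not part of any monitoring system.

```python
from collections import defaultdict

# Hypothetical dM/dt values per query group over some time window (made-up numbers).
rates = {
    "q1": {"calls": 1000.0, "total_exec_time": 50.0, "wal_bytes": 0.0},
    "q2": {"calls": 20.0, "total_exec_time": 400.0, "wal_bytes": 1000.0},
    "q3": {"calls": 300.0, "total_exec_time": 30.0, "wal_bytes": 90000.0},
    "q4": {"calls": 5.0, "total_exec_time": 1.0, "wal_bytes": 10.0},
}


def top_n_candidates(rates, metrics, n):
    """Return {queryid: [metrics for which it ranks in the top n]}."""
    candidates = defaultdict(list)
    for metric in metrics:
        # Rank query groups by this metric, highest rate first.
        ranked = sorted(rates, key=lambda q: rates[q].get(metric, 0.0), reverse=True)
        for queryid in ranked[:n]:
            candidates[queryid].append(metric)
    return dict(candidates)


candidates = top_n_candidates(rates, ["calls", "total_exec_time", "wal_bytes"], n=2)
for queryid, reasons in sorted(candidates.items()):
    print(queryid, "is a candidate because of:", ", ".join(reasons))
```

A query group that shows up under several metrics is usually a stronger candidate than one that appears only once, which is why the sketch keeps the list of reasons instead of a plain set.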

Then, for each query group in the optimization candidate list, analyze its dM/dc metrics together with the specific query statements and slow query logs/wait events, and decide whether the query is worth optimizing. For queries you decide to optimize (Plan), apply the techniques to be introduced in the subsequent “Micro Optimization” article (Do), and use the monitoring system to evaluate the effect (Check). After summarizing and analyzing the results (Act), enter the next PDCA Deming cycle, continuously managing and optimizing.

Besides taking TopN of metrics, visualization can also be used. Visualization is very helpful for identifying “major contributors” from the workload. Complex judgment algorithms might be far inferior to human DBAs’ intuition about monitoring graph patterns. To form a sense of proportion, we can use pie charts, tree maps, or stacked time series charts.

Stacking QPS of all query groups

For example, we can use pie charts to identify queries with the highest time/IO usage in the past hour, use 2D tree maps (size representing total time, color representing average RT) to show an additional dimension, and use stacked time series charts to show proportion changes over time.

We can also directly analyze the current PGSS snapshot, sort by different concerns, and select queries that need optimization according to your own criteria.

I/O time is helpful for analyzing query spike causes

Summary

Finally, let’s summarize the above content.

PGSS provides rich metrics, among which the most important cumulative metrics can be processed in three ways:

dM/dt: The time derivative of metric M, revealing resource usage per second, typically used for the objective of reducing resource consumption.

dM/dc: The call derivative of metric M, revealing resource usage per call, typically used for the objective of improving user experience.

%M: Percentage metrics showing a query group’s proportion in the entire workload, typically used for the objective of balancing workload.
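As a rough illustration of the three treatments, the sketch below derives them from two snapshots of a cumulative counter. The snapshot layout (a dictionary keyed by query ID) and the function name `derive` are assumptions made for this example, and counter resets between snapshots are not handled.

```python
def derive(prev, curr, elapsed_seconds, metric):
    """Compute dM/dt, dM/dc and %M for one cumulative metric.

    prev/curr map queryid -> {"calls": int, metric: number}, taken
    elapsed_seconds apart. Counter resets are not handled in this sketch.
    """
    deltas = {}
    for queryid, now in curr.items():
        before = prev.get(queryid, {})
        d_metric = now[metric] - before.get(metric, 0)
        d_calls = now["calls"] - before.get("calls", 0)
        deltas[queryid] = (d_metric, d_calls)

    total = sum(d_metric for d_metric, _ in deltas.values())
    result = {}
    for queryid, (d_metric, d_calls) in deltas.items():
        result[queryid] = {
            "per_second": d_metric / elapsed_seconds,              # dM/dt
            "per_call": d_metric / d_calls if d_calls else None,   # dM/dc
            "share": d_metric / total if total else None,          # %M
        }
    return result


# Made-up snapshots taken 60 seconds apart.
prev = {"a": {"calls": 100, "total_exec_time": 1000.0},
        "b": {"calls": 10, "total_exec_time": 500.0}}
curr = {"a": {"calls": 700, "total_exec_time": 4000.0},
        "b": {"calls": 20, "total_exec_time": 1500.0}}

stats = derive(prev, curr, 60, "total_exec_time")
print(stats["a"])
print(stats["b"])
```

In this made-up data, query group "a" dominates total time while "b" is far slower per call, which is the kind of difference the three views are meant to separate.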

Typically, we select high-value candidate queries by taking the top queries by the %M percentage metrics, then use the dM/dt and dM/dc metrics for further evaluation: confirming whether there is room for optimization and whether it is feasible, and evaluating the results. This process is repeated continuously.

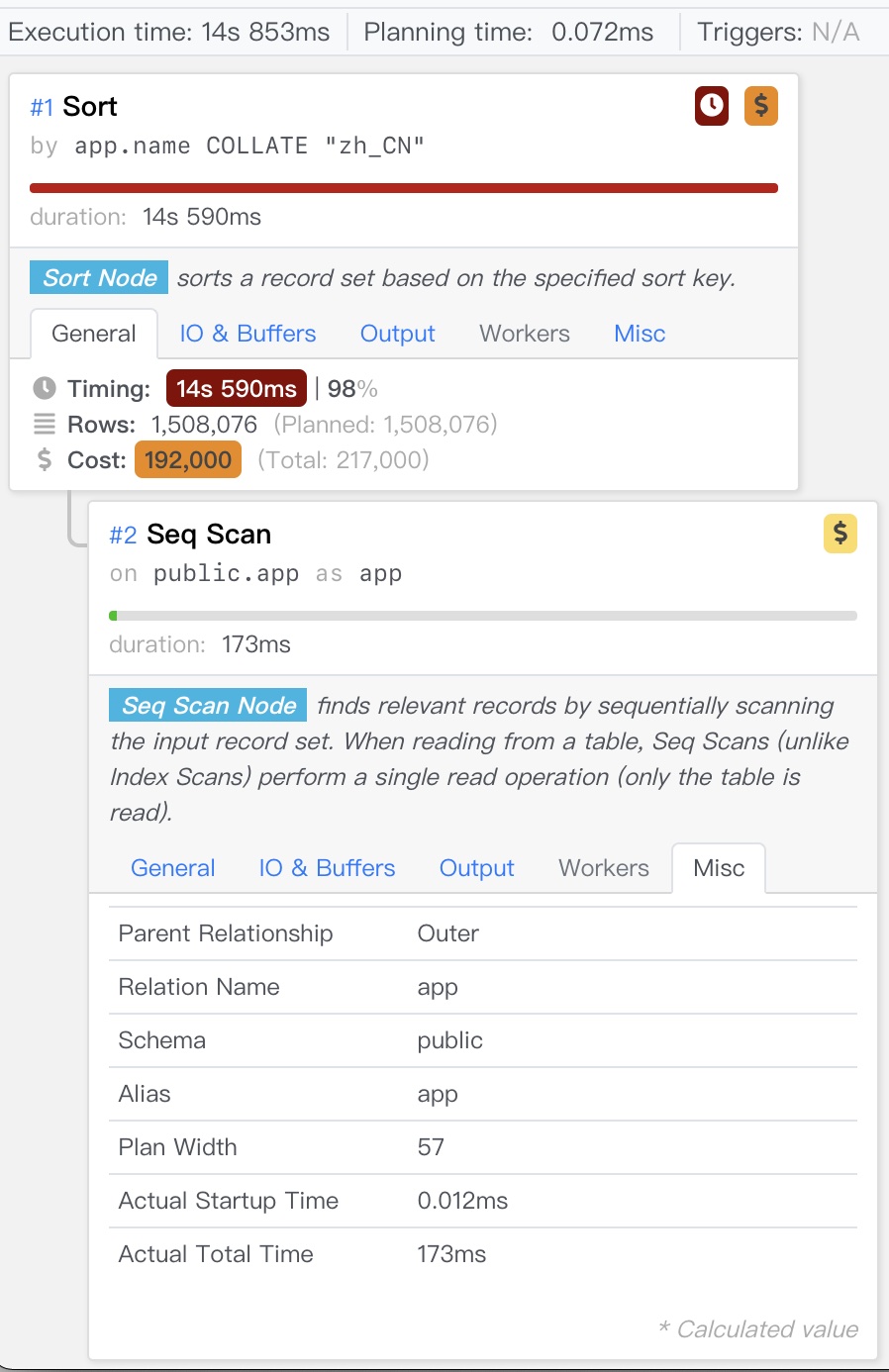

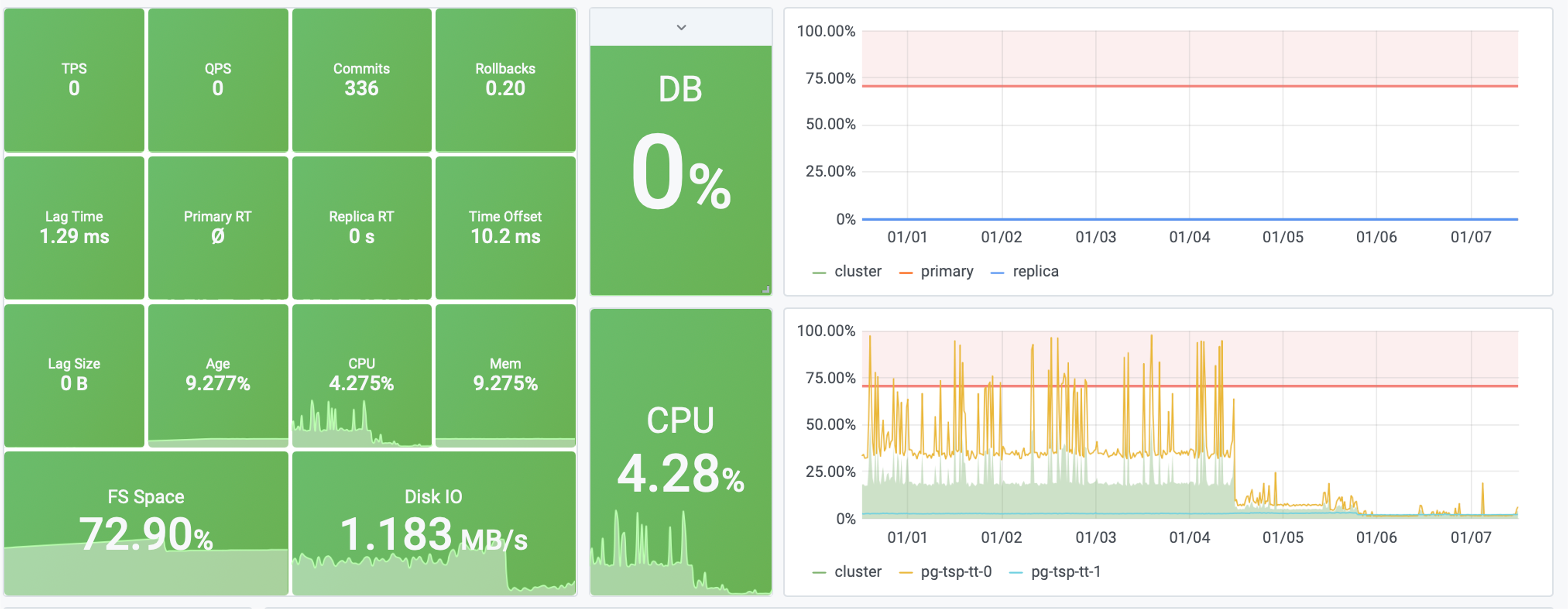

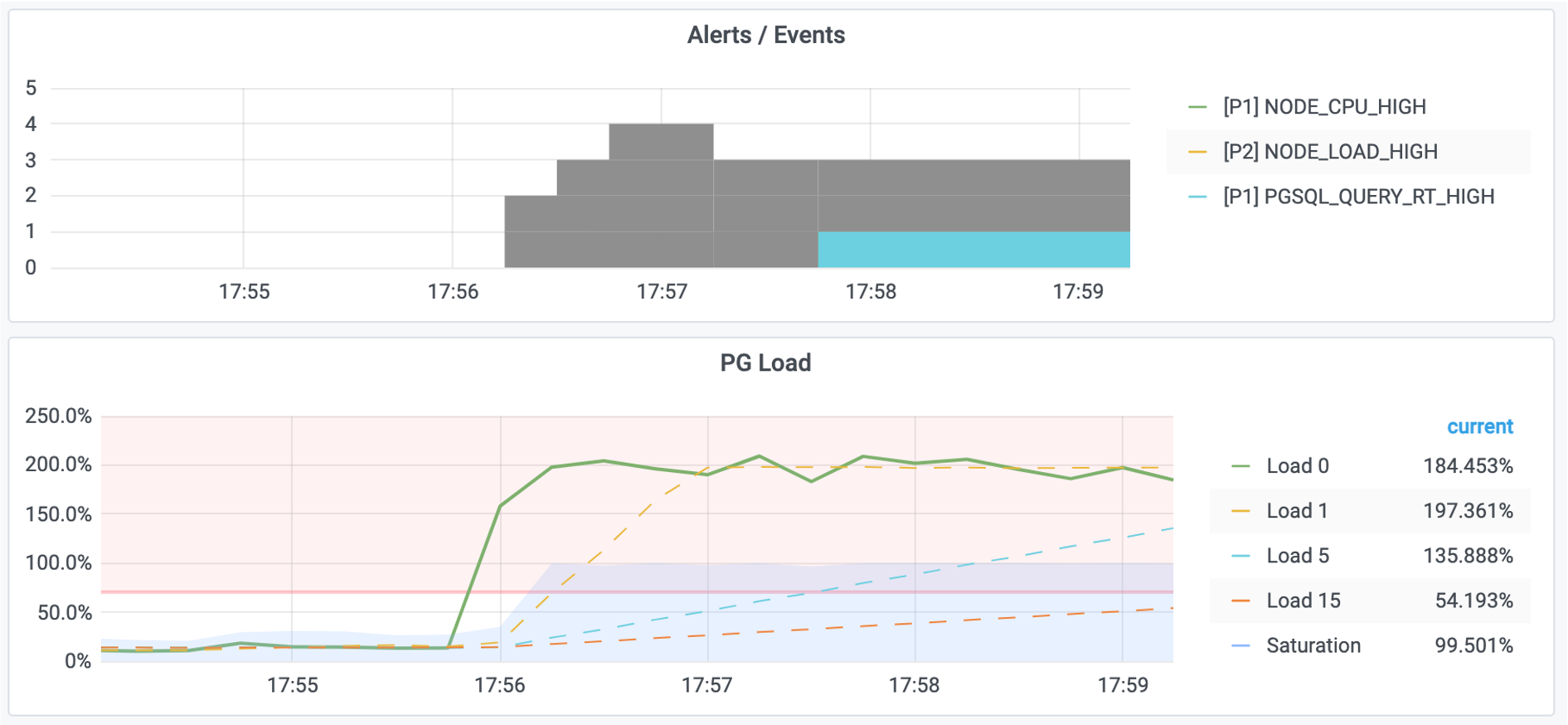

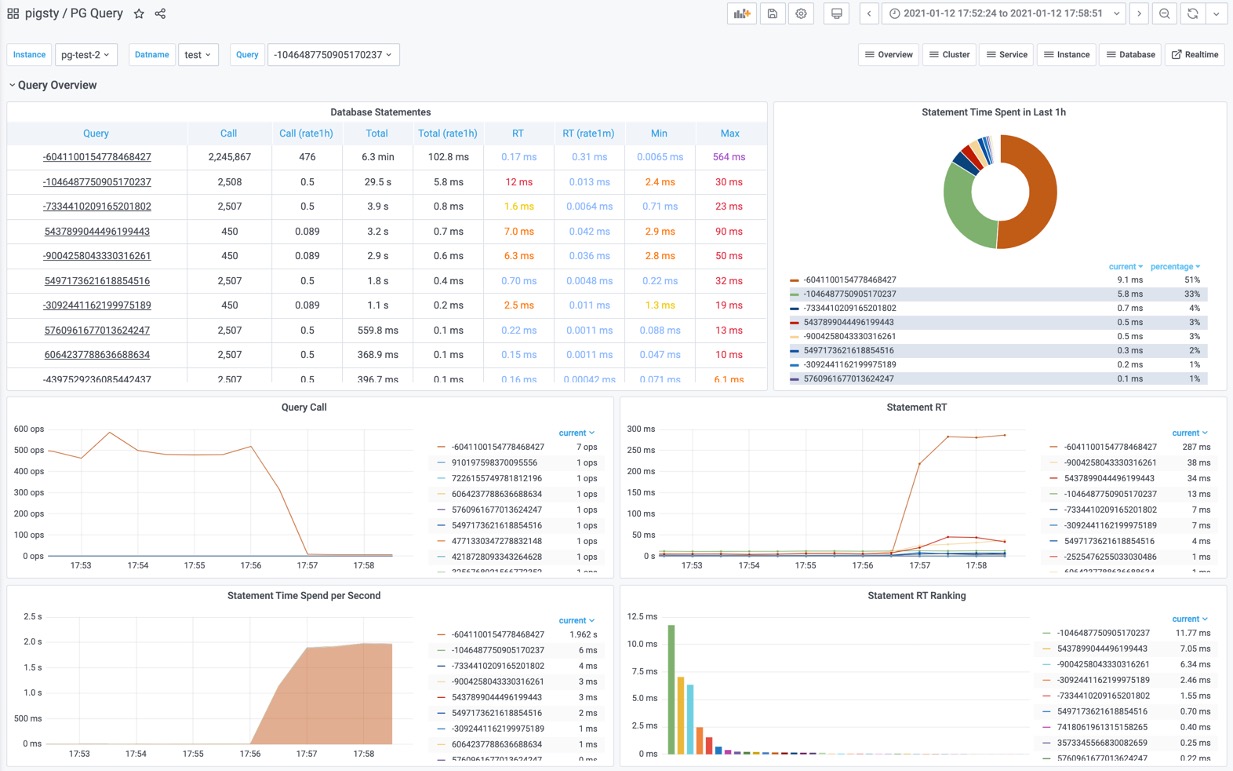

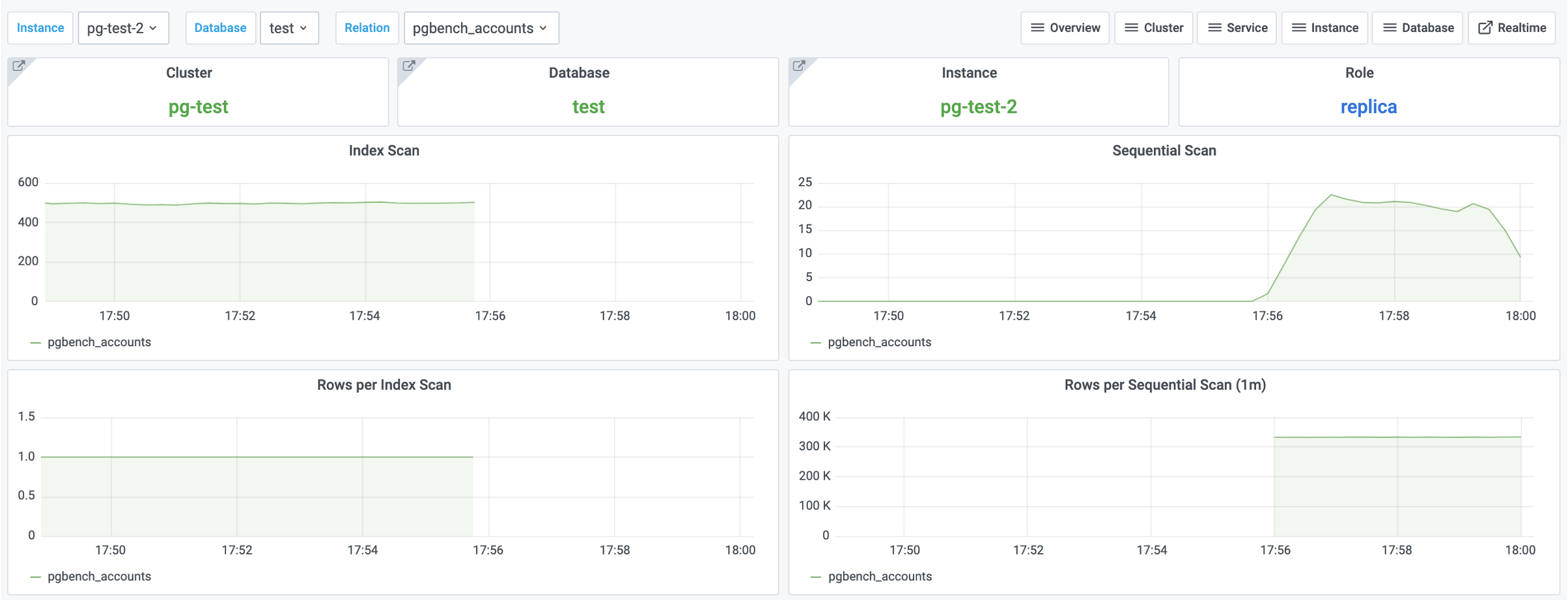

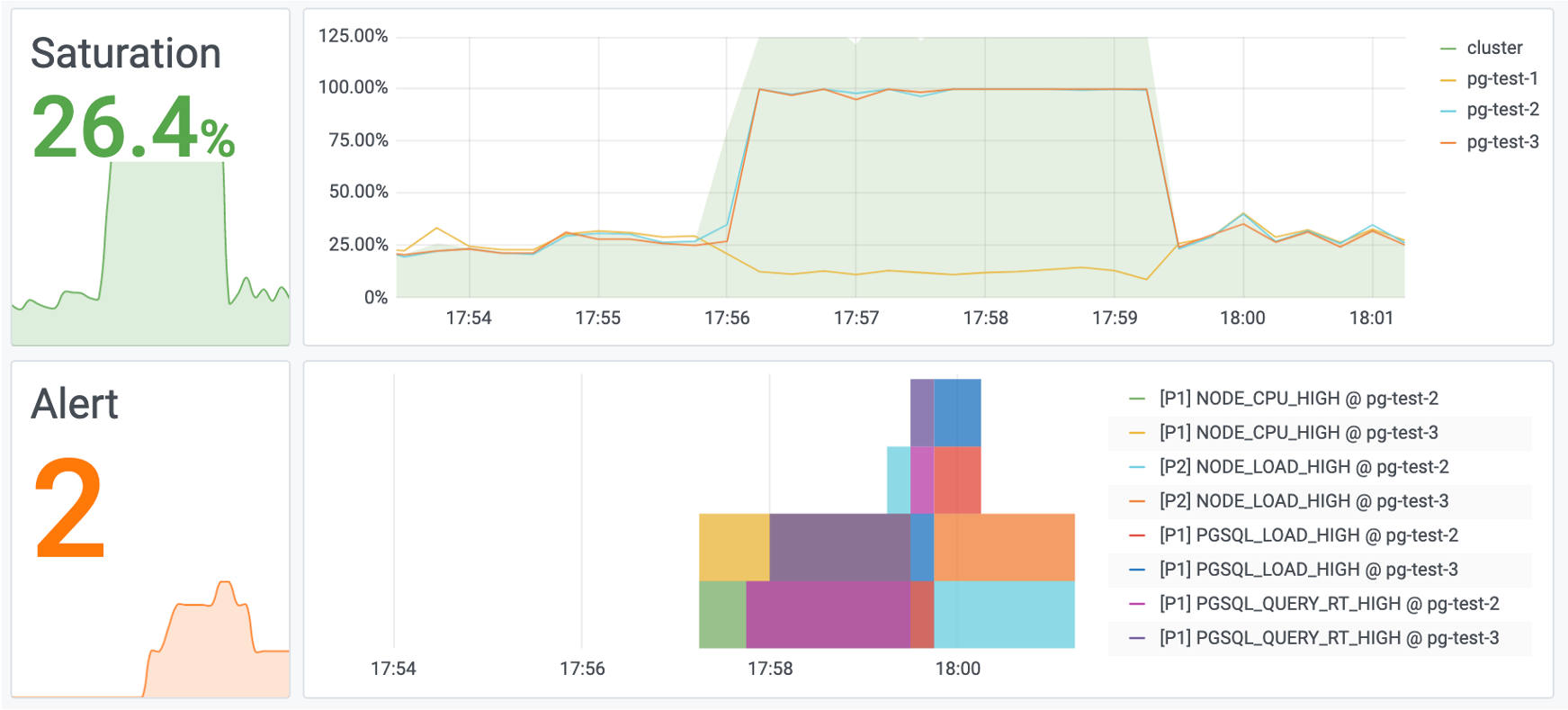

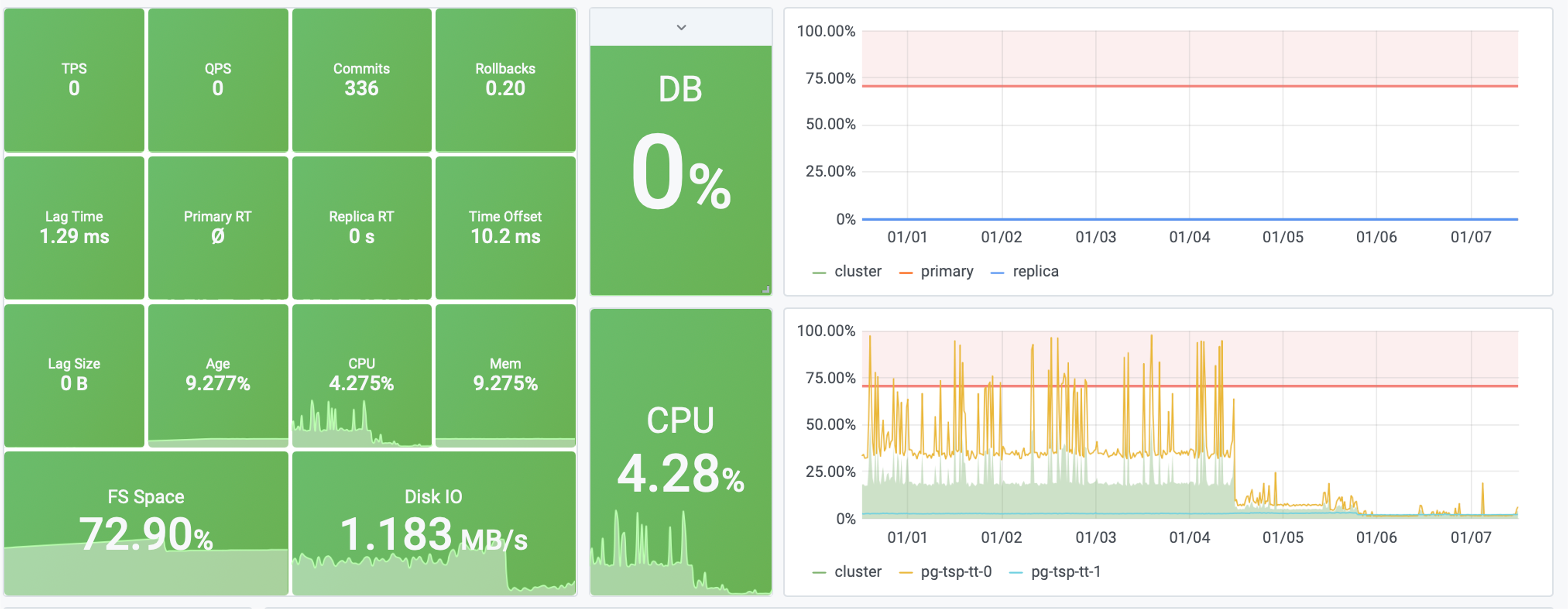

After understanding the methodology of macro optimization, we can use this approach to locate and optimize slow queries. Here’s a concrete example of Using Monitoring System to Diagnose PG Slow Queries. In the next article, we’ll introduce experience and techniques for PostgreSQL query micro optimization.

References

[1] PostgreSQL HowTO: pg_stat_statements by Nikolay Samokhvalov

[3] Using Monitoring System to Diagnose PG Slow Queries

[4] How to Monitor Existing PostgreSQL (RDS/PolarDB/On-prem) with Pigsty?

[5] Pigsty v2.5 Released: Ubuntu/Debian Support & Monitoring Revamp/New Extensions

Rescue Data with pg_filedump?

Backups are a DBA’s lifeline — but what if your PostgreSQL database has crashed without any backups? Maybe pg_filedump can help!

Recently, I encountered a rather challenging case. Here’s the situation: A user’s PostgreSQL database was corrupted. It was a Gitlab-managed PostgreSQL instance with no replicas, no backups, and no dumps. It was running on BCACHE (using SSD as transparent cache), and after a power outage, it wouldn’t start.

But that wasn’t the end of it. After several rounds of mishandling, it was completely wrecked: First, someone forgot to mount the BCACHE disk, causing Gitlab to reinitialize a new database cluster; then, due to various reasons, isolation failed, and two database processes ran on the same cluster directory, frying the data directory; next, someone ran pg_resetwal without parameters, pushing the database back to its origin point; finally, they let the empty database run for a while and then removed the temporary backup made before the corruption.

When I saw this case, I was speechless: How do you recover from this mess? It seemed like the only option was to extract data directly from the binary files. I suggested they try a data recovery company, and I asked around, but among the many data recovery companies, almost none offered PostgreSQL data recovery services. Those that did only handled basic issues, and for this situation, they all said it was a long shot.

Data recovery quotes are typically based on the number of files, ranging from ¥1000 to ¥5000 per file. With thousands of files in the Gitlab database, roughly 1000 tables, the total recovery cost could easily reach hundreds of thousands. But after a day, no one took the job, which made me think: If no one can handle this, doesn’t that make the PG community look bad?

I thought about it and decided: This job looks painful but also quite challenging and interesting. Let’s treat it as a dead horse and try to revive it — no cure, no pay. You never know until you try, right? So I took it on myself.

The Tool

To do a good job, one must first sharpen one’s tools. For data recovery, the first step is to find the right tool: pg_filedump is a great weapon. It can extract raw binary data from PostgreSQL data pages, handling many low-level tasks.

The tool can be compiled and installed with the classic make three-step process, but you need to have the corresponding major version of PostgreSQL installed first. Gitlab defaults to using PG 13, so ensure the corresponding version’s pg_config is in your path before compiling:

git clone https://github.com/df7cb/pg_filedump

cd pg_filedump && make && sudo make install

Using pg_filedump isn’t complicated. You feed it a data file and tell it the type of each column in the table, and it’ll interpret the data for you. For example, the first step is to find out which databases exist in the cluster. This information is stored in the system catalog pg_database. This is a cluster-level shared table located in the global directory and assigned the fixed OID 1262 during cluster initialization, so the corresponding physical file is typically global/1262.

vonng=# select 'pg_database'::RegClass::OID;

oid

------

1262

This catalog has many columns, but we mainly care about the first two: oid and datname. datname is the database name, and oid can be used to locate the database directory. We can use pg_filedump to extract this table and examine it. The -D parameter tells pg_filedump how to interpret the binary data for each row in the table. You specify the type of each field, separated by commas, and use ~ to ignore the rest.

As you can see, each row of data starts with COPY. Here we found our target database gitlabhq_production with OID 16386. Therefore, all files for this database should be located in the base/16386 subdirectory.
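A minimal sketch of how such an invocation could be assembled, using only the options described in this article (-D with a comma-separated type list, ~ to ignore the remaining columns, and -i for item details). The helper name `filedump_command` is hypothetical, and the sketch only builds the argument list; it does not run pg_filedump.

```python
import shlex


def filedump_command(path, column_types, ignore_rest=False, show_item_details=False):
    """Build an argv list for pg_filedump from a list of column type names."""
    spec = ",".join(column_types)
    if ignore_rest:
        spec += ",~"          # "~" tells pg_filedump to ignore the remaining columns
    argv = ["pg_filedump", "-D", spec]
    if show_item_details:
        argv.append("-i")     # print per-row metadata
    argv.append(path)
    return argv


# pg_database: decode only the first two columns (oid, datname) and skip the rest.
argv = filedump_command("global/1262", ["oid", "name"], ignore_rest=True)
print(shlex.join(argv))
```

Building the command as a list avoids shell quoting problems if it is later passed to something like `subprocess.run`.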

Recovering the Data Dictionary

Knowing the directory of files to recover, the next step is to extract the data dictionary. There are four important tables to focus on:

- pg_class: Contains important metadata for all tables

- pg_namespace: Contains schema metadata

- pg_attribute: Contains all column definitions

- pg_type: Contains type names

Among these, pg_class is the most crucial and indispensable table. The other system catalogs are nice to have: they make our work easier. So, we first attempt to recover this table.

pg_class is a database-level system catalog with a default OID of 1259, so the corresponding file for pg_class should be base/16386/1259, in the gitlabhq_production database directory.

A side note: Those familiar with PostgreSQL internals know that while the actual underlying storage filename (RelFileNode) defaults to matching the table’s OID, some operations might change this. In such cases, you can use pg_filedump -m pg_filenode.map to parse the mapping file in the database directory and find the Filenode corresponding to OID 1259. Of course, here they match, so we’ll move on.

We parse its binary file based on the pg_class table structure definition (note: use the table structure for the corresponding PG major version):

pg_filedump -D 'oid,name,oid,oid,oid,oid,oid,oid,oid,int,real,int,oid,bool,bool,char,char,smallint,smallint,bool,bool,bool,bool,bool,bool,char,bool,oid,xid,xid,text,text,text' -i base/16386/1259

Then you can see the parsed data. The data here is single-line records separated by \t, in the same format as PostgreSQL COPY command’s default output. So you can use scripts to grep and filter, remove the COPY at the beginning of each line, and re-import it into a real database table for detailed examination.
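The filtering step can be done with grep and sed, or with a short script like the sketch below. It assumes that decoded rows appear on lines beginning with `COPY:` followed by a space, which should be checked against the output of your pg_filedump version; the sample text is made up for illustration.

```python
def copy_rows(dump_text, prefix="COPY:"):
    """Extract decoded rows from pg_filedump output as lists of fields."""
    rows = []
    for line in dump_text.splitlines():
        stripped = line.lstrip()
        if not stripped.startswith(prefix):
            continue                      # skip headers and per-item metadata
        payload = stripped[len(prefix):]
        if payload.startswith(" "):
            payload = payload[1:]         # drop the single space after the prefix
        rows.append(payload.split("\t"))  # fields are tab-separated, as in COPY
    return rows


def to_tsv(rows):
    """Join rows back into tab-separated text, one record per line."""
    return "\n".join("\t".join(fields) for fields in rows)


# Illustrative sample, not real tool output.
sample = (
    " Item   1 -- Length:   40\n"
    "COPY: 1\ttemplate1\n"
    "COPY: 16386\tgitlabhq_production\n"
)
rows = copy_rows(sample)
print(to_tsv(rows))
```

The resulting text can be saved to a file and loaded into a staging table with the COPY command for closer inspection.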

When recovering data, there are many details to pay attention to, and the first one is that you need to handle deleted rows. How do you identify them? Use the -i parameter to print each row’s metadata. The metadata includes an XMAX field. If a row was deleted by a transaction, its XMAX is set to that transaction’s XID. So a non-zero XMAX generally indicates a deleted record that shouldn’t be included in the final output. Strictly speaking, XMAX is also set by row locks and by deletes that were later rolled back, so treat this as a first-pass filter.

Here XMAX indicates this is a deleted record
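A sketch of this filter in Python follows. It assumes that, with -i, each row’s metadata contains a fragment of the form `XMAX: <number>` printed before that row’s `COPY:` line; this layout is an assumption and should be verified against real output. The sample text is made up.

```python
import re

XMAX_RE = re.compile(r"XMAX:\s*(\d+)")


def live_rows(dump_text):
    """Keep only COPY rows whose preceding metadata shows XMAX = 0."""
    rows = []
    xmax = None
    for line in dump_text.splitlines():
        match = XMAX_RE.search(line)
        if match:
            xmax = int(match.group(1))    # remember XMAX for the next COPY line
            continue
        stripped = line.lstrip()
        if stripped.startswith("COPY:"):
            payload = stripped[len("COPY:"):]
            if payload.startswith(" "):
                payload = payload[1:]
            if xmax == 0:                 # rows without metadata are skipped too
                rows.append(payload)
            xmax = None                   # metadata applies to one row only
    return rows


# Illustrative sample, not real tool output.
sample = "\n".join([
    " Item   1 -- Length:  100  Flags: NORMAL",
    "  XMIN: 2  XMAX: 0  CID|XVAC: 0",
    "COPY: 1259\tpg_class",
    " Item   2 -- Length:  100  Flags: NORMAL",
    "  XMIN: 700  XMAX: 812  CID|XVAC: 0",
    "COPY: 40001\told_table",
])
kept = live_rows(sample)
print(kept)
```

Skipping rows for which no XMAX was seen is a deliberately conservative choice; depending on the situation you may prefer to keep them and review them by hand.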

With the pg_class data dictionary, you can clearly find the OID correspondences for other tables, including the other system catalogs. You can recover pg_namespace, pg_attribute, and pg_type using the same method. What can you do with these four tables?

You can use SQL to generate the input path for each table, automatically construct the type of each column as the -D parameter, and generate the schema for temporary result tables. In short, you can automate all the necessary tasks programmatically.

SELECT id, name, nspname, relname, nspid, attrs, fields, has_tough_type,

CASE WHEN toast_page > 0 THEN toast_name ELSE NULL END AS toast_name, relpages, reltuples, path

FROM

(

SELECT n.nspname || '.' || c.relname AS "name", n.nspname, c.relname, c.relnamespace AS nspid, c.oid AS id, c.reltoastrelid AS tid,

toast.relname AS toast_name, toast.relpages AS toast_page,

c.relpages, c.reltuples, 'data/base/16386/' || c.relfilenode::TEXT AS path

FROM meta.pg_class c

LEFT JOIN meta.pg_namespace n ON c.relnamespace = n.oid

LEFT JOIN LATERAL (SELECT * FROM meta.pg_class t WHERE t.oid = c.reltoastrelid) toast ON TRUE -- keep tables that have no TOAST relation

WHERE c.relkind = 'r' AND c.relpages > 0

AND c.relnamespace IN (2200, 35507, 35508)

ORDER BY c.relnamespace, c.relpages DESC

) z,

LATERAL ( SELECT string_agg(name,',') AS attrs,

string_agg(std_type,',') AS fields,

max(has_tough_type::INTEGER)::BOOLEAN AS has_tough_type

FROM meta.pg_columns WHERE relid = z.id ) AS columns;

Note that the data type names supported by pg_filedump -D parameter are strictly limited to standard names, so you must convert boolean to bool, INTEGER to int. If the data type you want to parse isn’t in the list below, you can first try using the TEXT type. For example, the INET type for IP addresses can be parsed using TEXT.

bigint bigserial bool char charN date float float4 float8 int json macaddr name numeric oid real serial smallint smallserial text time timestamp timestamptz timetz uuid varchar varcharN xid xml

But there are indeed other special cases that require additional processing, such as PostgreSQL’s ARRAY type, which we’ll cover in detail later.
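The type conversion can be automated with a small lookup, sketched below. The supported names come from the list above (the parameterized charN and varcharN forms are left out for brevity); the alias table and the `filedump_type` helper are assumptions made for this example, and unknown types fall back to text as suggested above.

```python
# Type names accepted by pg_filedump -D, taken from the list above
# (parameterized forms such as charN / varcharN are omitted here).
SUPPORTED = set(
    "bigint bigserial bool char date float float4 float8 int json macaddr "
    "name numeric oid real serial smallint smallserial text time timestamp "
    "timestamptz timetz uuid varchar xid xml".split()
)

# Common SQL spellings mapped to the short names pg_filedump expects.
ALIASES = {
    "boolean": "bool",
    "integer": "int",
    "character varying": "varchar",
    "double precision": "float8",
    "timestamp with time zone": "timestamptz",
    "timestamp without time zone": "timestamp",
    "time with time zone": "timetz",
    "time without time zone": "time",
}


def filedump_type(ddl_type):
    """Return (pg_filedump type, needs_manual_handling) for a DDL type name."""
    normalized = " ".join(ddl_type.lower().split())
    normalized = ALIASES.get(normalized, normalized)
    if normalized in SUPPORTED:
        return normalized, False
    return "text", True   # fallback: try text first and flag the column for review


for ddl_type in ["bigint", "timestamp with time zone", "INTEGER", "boolean", "text[]"]:
    print(ddl_type, "->", filedump_type(ddl_type))
```

The second element of the result plays the same role as the has_tough_type column in the query above: it marks columns, such as arrays, that need extra processing.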

Recovering a Regular Table

Recovering a regular data table isn’t fundamentally different from recovering a system catalog table: it’s just that catalog schemas and information are publicly standardized, while the schema of the database to be recovered might not be.

Gitlab is also a well-known open-source software, so finding its database schema definition isn’t difficult. If it’s a regular business system, you can spend more effort to reconstruct the original DDL from pg_catalog.

Once you know the DDL definition, you can use the data type of each column in the DDL to interpret the data in the binary file. Let’s use public.approval_project_rules, a regular table in Gitlab, as an example to demonstrate how to recover such a regular data table.

create table approval_project_rules

(

id bigint,

created_at timestamp with time zone,

updated_at timestamp with time zone,

project_id integer,

approvals_required smallint,

name varchar,

rule_type smallint,

scanners text[],

vulnerabilities_allowed smallint,

severity_levels text[],

report_type smallint,

vulnerability_states text[],

orchestration_policy_idx smallint,

applies_to_all_protected_branches boolean,

security_orchestration_policy_configuration_id bigint,

scan_result_policy_id bigint

);

First, we need to convert these types into types that pg_filedump can recognize. This involves type mapping: if you have uncertain types, like the text[] string array fields above, you can first use text type as a placeholder, or simply use ~ to ignore them: